Table of Contents

Welcome to the SoilWise Technical Documentation!

SoilWise Technical Documentation currently consists of the following sections:

- Technical Components

- Interfaces

- Infrastructure

- Glossary

- Printable version - all sections combined on a single page that can be printed using the web browser's print options

Essential Terminology

A full list of terms used within this Technical Documentation can be found in the Glossary. The most essential ones are defined as follows:

- (Descriptive) metadata: Summary information describing digital objects such as datasets and knowledge resources.

- Metadata record: An entry in e.g. a catalogue or abstracting and indexing service with summary information about a digital object.

- Data: A collection of discrete or continuous values that convey information, describing the quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted formally (Wikipedia).

- Dataset: (Also: Data set) A collection of data (Wikipedia).

- Knowledge: Facts, information, and skills acquired through experience or education; the theoretical or practical understanding of a subject. SoilWise mainly considers explicit knowledge, i.e. information that is easily articulated, codified, stored, and accessed, e.g. via books, web sites, or databases. It does not include implicit knowledge (information transferable via skills) nor tacit knowledge (gained via personal experiences and individual contexts). Explicit knowledge can be further divided into semantic and structural knowledge:

- Semantic knowledge: Also known as declarative knowledge, refers to knowledge about facts, meanings, concepts, and relationships. It is the understanding of the world around us, conveyed through language. Semantic knowledge answers the "What?" question about facts and concepts.

- Structural knowledge: Knowledge about the organisation and interrelationships among pieces of information. It is about understanding how different pieces of information are interconnected. Structural knowledge explains the "How?" and "Why?" regarding the organisation and relationships among facts and concepts.

- Knowledge resource: A digital object, such as a document, a web page, or a database, that holds relevant explicit knowledge.

Release notes

| Date | Action |

|---|---|

| 27. 2. 2025 | v2.1 Released: For D2.2 Developed & Integrated DM components, v2 D3.2 Developed & Integrated KM components, v2 and D4.2 Repository infrastructure, components and APIs, v2 purposes |

| 26. 2. 2025 | Link liveliness assessment tool updated |

| 25. 2. 2025 | Metadata Validation updated |

| 20. 2. 2025 | Knowledge Graph component updated |

| 19. 2. 2025 | Apache Solr component added |

| 19. 2. 2025 | Storage updated |

| 19. 2. 2025 | Catalogue updated |

| 14. 2. 2025 | Metadata Validation updated |

| 13. 2. 2025 | Metadata Augmentation updated |

| 7. 2. 2025 | Interfaces description updated |

| 30. 9. 2024 | v2.0 Released: For D2.1 Developed & Integrated DM components, v1 D3.1 Developed & Integrated KM components, v1 and D4.1 Repository infrastructure, components and APIs, v1 purposes |

| 30. 9. 2024 | Technical Components functionality updated according to first SoilWise repository prototype |

| 27. 8. 2024 | APIs section restructured |

| 20. 8. 2024 | Knowledge Graph component added |

| 13. 8. 2024 | Metadata Authoring component added |

| 1. 7. 2024 | Metadata Augmentation component added |

| 30. 4. 2024 | v1.0 Released: For D1.3 Architecture Repository v1 purposes |

| 27. 3. 2024 | Technical Components restructured according to the architecture from Brugges Technical Meeting |

| 27. 3. 2024 | v0.1 Released: Technical documentation based on the Consolidated architecture |

| 10. 2. 2024 | Technical Documentation was initialized |

Technical Components

Introduction

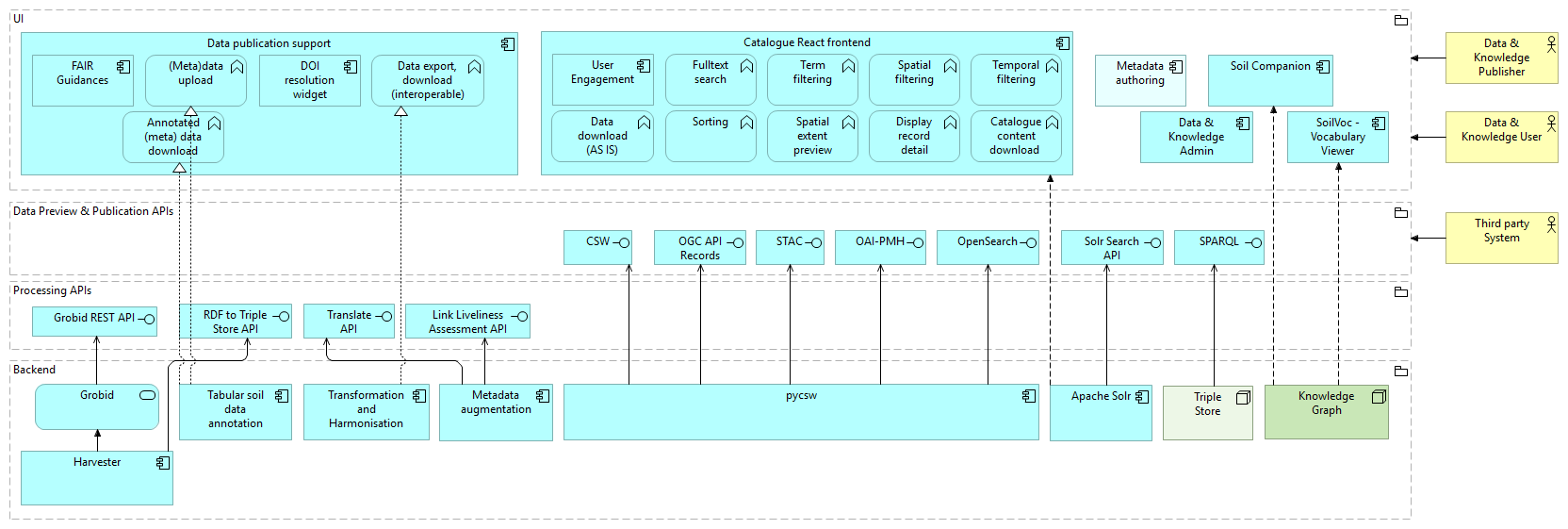

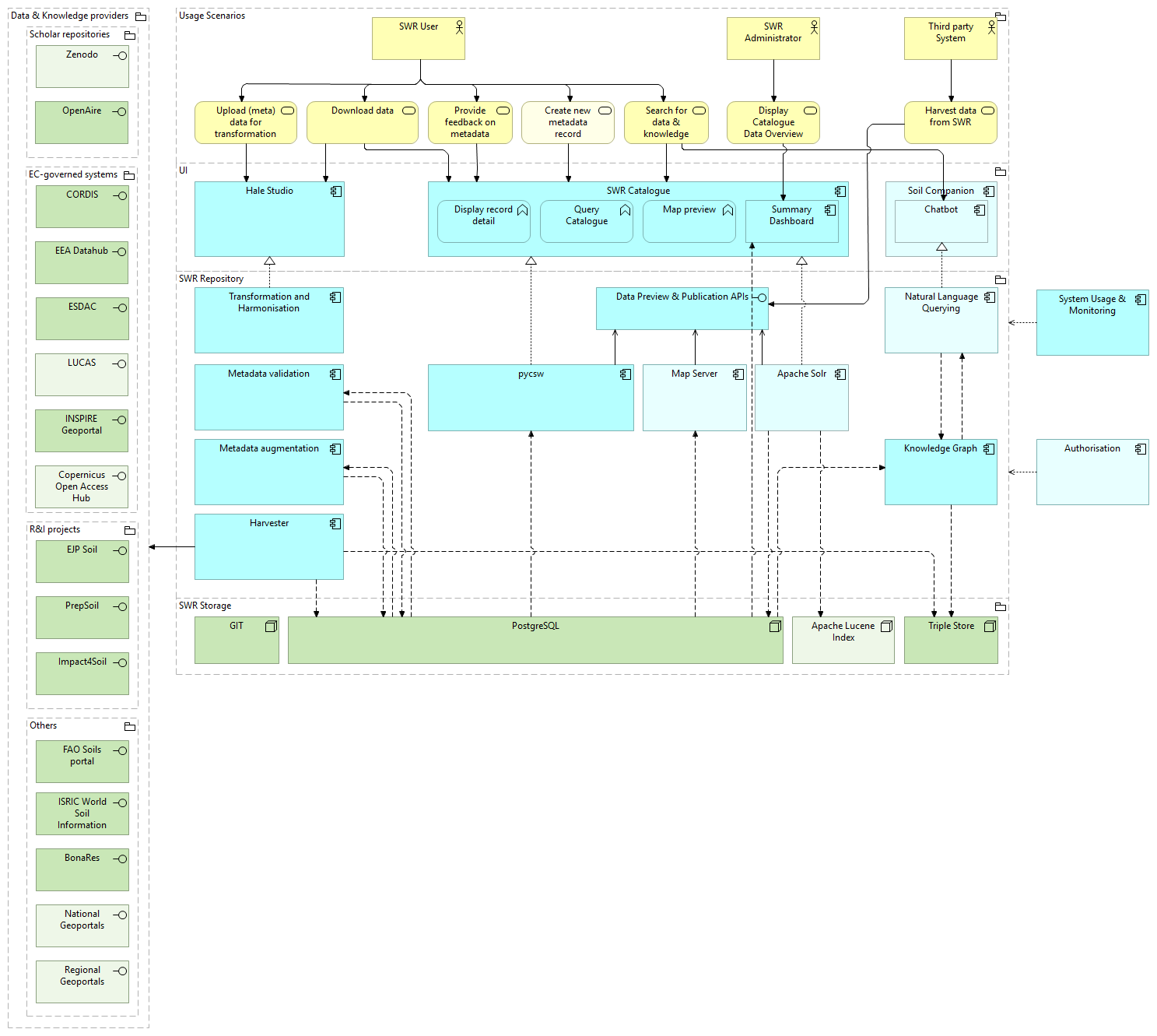

The SoilWise Catalogue (SWC) architecture aims to facilitate efficient soil data and knowledge management. It gathers, processes, and disseminates data from diverse sources. The system prioritizes high-quality data dissemination, knowledge extraction and interoperability, while user management and monitoring tools ensure secure access and system health. Note that the SWC primarily serves to power Decision Support Systems (DSS) rather than being a DSS itself.

The presented architecture represents an outlook and a framework for ongoing SoilWise development. As such, the implementation has been following intrinsic (within the SoilWise project) and extrinsic (e.g. EUSO development, Mission Soil projects) opportunities and limitations. The presented architecture corresponds to the current state of the third SoilWise prototype delivery. Modifications during the implementation will be incorporated into the final version of the SoilWise architecture, due in M42.

This section lists the technical components for building the SoilWise Catalogue, as foreseen in the architecture design:

- Harvester

- Repository Storage

- Metadata Catalogue

- Soil Companion (Chatbot)

- Data publication support

- Metadata Validation

- Metadata Augmentation

- Knowledge Graph

- Data & Knowledge Administration Console

- User Management and Access Control

- System & Usage Monitoring

Fig. 1: A high-level overview of SoilWise Repository architecture

A full version of the architecture diagram is available at: https://soilwise-he.github.io/soilwise-architecture/.

Harvester

Info

Current version: 0.2.0

Technology: Git pipelines

Release: https://doi.org/10.5281/zenodo.14923563

Project: Harvesters

The Harvester component automatically harvests sources to populate the SoilWise Repository (SWR) with metadata on datasets and knowledge sources.

Introduction

Overview and Scope

Metadata harvesting is the process of ingesting metadata, i.e. evidence on data and knowledge, from remote sources and storing it locally in the catalogue for fast searching. It is a scheduled process, so that the local copy and the remote metadata are kept aligned. Various components exist that can harvest metadata from various (standardised) APIs. SoilWise aims to use existing components where available.

The harvesting mechanism relies on the concept of a universally unique identifier (UUID) or uniform resource identifier (URI), which is commonly assigned by the metadata creator or publisher. Another important concept behind harvesting is the last change date. Every time a metadata record is changed, the last change date is updated. Storing this parameter and comparing it with a new one allows any system to find out whether the metadata record has been modified since the last update. An exception is when metadata is removed remotely: the SoilWise Repository can only derive that fact by harvesting the full remote content. Discussion is needed to understand whether SWR should keep a copy of the remote source anyway, for archiving purposes.

A harvesting task typically extracts only the records with an update date later than the last successful harvester run, provided the remote system supports such a filter; otherwise the full set is harvested.
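The selection rule described above can be sketched as follows. This is a minimal illustration, not the SoilWise implementation: the function name `select_records`, the `modified` field and the `supports_date_filter` flag are assumptions made for the example, and in practice the date filter would be applied by the remote endpoint rather than locally.

```python
from datetime import datetime, timezone
from typing import Iterable, Optional


def select_records(
    remote_records: Iterable[dict],
    last_successful_run: Optional[datetime],
    supports_date_filter: bool,
) -> list[dict]:
    """Return the records a harvest run should ingest."""
    records = list(remote_records)
    # First run, or an endpoint without a date filter: take the full set.
    if last_successful_run is None or not supports_date_filter:
        return records
    # Otherwise keep only records changed after the last successful run.
    return [r for r in records if r["modified"] > last_successful_run]


# Illustrative data only.
remote = [
    {"id": "a", "modified": datetime(2025, 1, 10, tzinfo=timezone.utc)},
    {"id": "b", "modified": datetime(2025, 2, 20, tzinfo=timezone.utc)},
]
last_run = datetime(2025, 2, 1, tzinfo=timezone.utc)

incremental = select_records(remote, last_run, supports_date_filter=True)
full = select_records(remote, last_run, supports_date_filter=False)
print([r["id"] for r in incremental])  # ['b']
print(len(full))  # 2
```

Note that neither branch detects records deleted at the source; as stated above, that requires comparing against the full remote content.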

Local improvements to metadata records should be stored separately from the harvested content for the following reasons:

- The harvesting is periodic so any local change to harvested metadata will be lost during the next run.

- The change date may be used to keep track of changes so if the metadata gets changed, the harvesting mechanism may be compromised.

If inconsistencies with imported metadata are identified, we can add a statement about them to the graph. We can also notify the author, so they can fix the inconsistency on their side.

A governance aspect still under discussion is whether harvested content should be removed as soon as a harvester configuration is removed, or when records are removed from the remote endpoint. The risk of removing content is that relations within the graph are broken. An alternative is to indicate that the record has been archived by the provider.

On top of a unique identification, SWR also captures a unique calculated string (a hash) for the harvested content. This makes it possible to identify changes even if the update date has not changed.
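A minimal sketch of hash-based change detection is shown below. The choice of SHA-256 and the function names are assumptions for illustration; the documentation does not state which hash function the harvester uses.

```python
import hashlib
from typing import Optional


def content_hash(raw_metadata: str) -> str:
    """SHA-256 digest of the harvested content, as a hex string (assumed algorithm)."""
    return hashlib.sha256(raw_metadata.encode("utf-8")).hexdigest()


def has_changed(stored_hash: Optional[str], raw_metadata: str) -> bool:
    """True if the record is new or its content differs from the stored copy."""
    return stored_hash != content_hash(raw_metadata)


# Illustrative record content only.
first = "<record><title>Soil map</title></record>"
stored = content_hash(first)

print(has_changed(stored, first))  # False
print(has_changed(stored, first.replace("map", "atlas")))  # True
print(has_changed(None, first))  # True
```

Because the comparison uses the content itself, it also catches edits made at the source without an updated change date.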

Typical tasks of a harvester:

- Define a harvester job
    - Schedule (on request, weekly, daily, hourly)
    - Endpoint / Endpoint type (example.com/csw -> OGC:CSW)
    - Apply a filter (only records with keyword='soil-mission')

- Understand success of a harvest job
    - Overview of harvested content (120 records)
    - Which runs failed, and why? (today failed -> log, yesterday successful -> log)
    - Monitor running harvesters (20% done -> cancel)

- Define behaviours on harvested content
    - Skip records with low quality (if test xxx fails)
    - Mint identifier if missing ( https://example.com/data/{uuid} )
    - A model transformation before ingestion ( example-transform.xsl / do-something.py )
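To make the tasks above more concrete, the sketch below models a harvester job definition and the "mint identifier if missing" behaviour. The `HarvestJob` class, its field names and the `mint_identifier` helper are hypothetical and only mirror the examples in the list; they are not the actual SoilWise configuration format.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class HarvestJob:
    """Hypothetical job definition; field names are illustrative only."""
    endpoint: str
    endpoint_type: str  # e.g. "OGC:CSW"
    schedule: str = "weekly"  # on request, weekly, daily, hourly
    keyword_filter: Optional[str] = None
    id_template: str = "https://example.com/data/{uuid}"


def mint_identifier(record: dict, job: HarvestJob) -> dict:
    """Return a copy of the record, minting an identifier if it has none."""
    if record.get("identifier"):
        return dict(record)
    minted = job.id_template.format(uuid=uuid.uuid4())
    return {**record, "identifier": minted}


job = HarvestJob("https://example.com/csw", "OGC:CSW", keyword_filter="soil-mission")

# "10.1234/example" is a placeholder, not a real DOI.
kept = mint_identifier({"identifier": "10.1234/example", "title": "A"}, job)
minted = mint_identifier({"title": "B"}, job)

print(kept["identifier"])
print(minted["identifier"].startswith("https://example.com/data/"))  # True
```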

Intended Audience

Harvester is a backend component, therefore we only expect a maintenance role:

- SWC Administrator monitoring the health status, logs, etc. Administrators can manually start specific harvesting pipelines.

Resource Types

Metadata for the following resource types is foreseen to be harvested:

- Data & Knowledge Resources (datasets, services, software, documents, articles, videos)

- Organisations, Projects, LTE, Living labs initiatives

- News items from relevant websites

These entities relate to each other as:

flowchart LR

people -->|memberOf| o[organisations]

o -->|partnerIn| p[projects]

p -->|produce| d[data & knowledge resources]

o -->|publish| d

d -->|describedIn| c[catalogues]

p -->|part-of| fs[Fundingscheme]

Datasets

Metadata records of datasets are, for the first iteration, primarily imported from ESDAC, the INSPIRE GeoPortal, BonaRes, Cordis/OpenAire, ISRIC, FAO, and EEA. In later iterations SoilWise aims to include other projects and portals, such as national or thematic portals. These repositories contain a large number of datasets. Selecting the key datasets within the SoilWise scope requires know-how that is to be developed within SoilWise.

Knowledge sources

Compared to datasets, knowledge sources are typically very heterogeneous (reports, articles, websites, videos) and collected in a variety of repositories. SoilWise encourages projects to use persistent repositories with sufficient options for metadata capture. For the academic community, for example, inclusion in OpenAire is a prerequisite for inclusion in SWR. This allows SWR to use the OpenAire functionalities to collect evidence about the resources.

The SoilWise project team is still exploring which knowledge resources to include. As an example, an important cluster of knowledge sources consists of academic articles and report deliverables from Mission Soil Horizon Europe projects. These resources are accessible from Cordis and OpenAire, filtered by the grant number of the projects. Extracting content from Cordis and OpenAire can be achieved using a harvesting task (using the Cordis schema, extended with post-processing). For the first iteration, SoilWise aims to achieve this goal. In future iterations new knowledge sources may become relevant; we will investigate at that point what the best approach is to harvest them.

Projects and organisations

Project details are extracted from Cordis. Discussion is ongoing on how to improve this process, for example whether projects without European funding should be included.

Individuals and organisations are typically mentioned as contact, author or owner in metadata records, as well as participant or funder in projects.

A challenge for SWR is to understand the alignment between those individuals and organisations, to enable users to understand the relations between projects, organisations and resources.

News items

A need has been expressed to be informed about ongoing Soil Mission projects. For that reason a harvesting mechanism has been set up which extracts and aggregates the news items published on the various Soil Mission project websites. A common protocol implemented by most of the project websites, RSS/Atom feeds, is used to extract that information. At the moment we are investigating whether we can also extract announced upcoming events, for example via the iCalendar protocol, but we have already noticed that this protocol has very little adoption.

Adoption of standards

With respect to harvesting, it is important to note that repositories adopt standards to widely varying degrees, for metadata models and identification as well as for access protocols. This will, in some cases, make it necessary to develop customized harvesting and metadata extraction processes. It also means that informed decisions need to be made on which resources to include, based on priority, required efforts and available capacity.

Key features

The Harvester component currently comprises the following functions:

- Harvest records from metadata and knowledge resources

- Metadata Harmonization

- Metadata RDF Turtle Serialization

- RDF to Triple Store

- Duplication Identification

Harvest records from metadata and knowledge resources

Note: the second SoilWise Repository prototype contained 19,324 harvested metadata records (as of 14. 2. 2025).

CORDIS

European research projects typically advertise their research outputs via Cordis. This makes Cordis a likely candidate to discover research outputs, such as reports, articles and datasets. Cordis does not capture many metadata properties. In those cases where a resource is identified by a DOI, additional metadata can be found in OpenAire via the DOI. The scope of projects from which to include deliverables is still under discussion.

Which projects to include is derived from 2 sources:

- ESDAC maintains a list of historic EU funded research projects

- Mission soil platform maintains a list of current Mission soil projects

A script fetches the content from these 2 sources and prepares relevant content for the CORDIS and OpenAire harvesting. The content in these pages is unstructured HTML, which is scraped using a Python library. This is not optimal: the scraper expects a specific HTML structure, which makes it fragile.

Results of the scrape activity are stored in the table harvest.projects. For each project a Record control number (RCN) is retrieved from the Cordis knowledge graph. This RCN could be used to filter OpenAire; however, OpenAire can also be filtered using the project grant number. At this moment the Cordis knowledge graph does not yet contain the Mission Soil projects.

Currently we do not harvest resources from Cordis which do not have a DOI. This includes mainly progress reports of the projects.

OpenAire

For those resources discovered via Cordis/ESDAC and identified by a DOI, a harvester fetches additional metadata from OpenAire. OpenAire is a catalogue initiative which harvests metadata from popular scientific repositories, such as Zenodo, Dataverse, etc.

Not all DOIs registered in Cordis are available in OpenAire. OpenAire only lists resources with an open access license. Other DOIs can be fetched from the DOI registry directly or via Crossref.org. This work is still in preparation.

Records in OpenAire are stored in the OpenAire Research Graph (OAF) format, which is transformed to a metadata set based on Dublin Core.

OGC-CSW

Many (spatial) catalogues, such as INSPIRE GeoPortal, Bonares and ISRIC, advertise their metadata via the Catalogue Service for the Web (CSW) standard. The OWSLib library is used to query records from CSW endpoints. A filter can be configured to retrieve subsets of the catalogue.

Occasionally, records advertised via CSW also include a DOI reference (Bonares/ISRIC). Additional metadata for these DOIs is extracted from OpenAire/Crossref.
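As a protocol-level illustration of a filtered CSW request, the sketch below builds a CSW 2.0.2 `GetRecords` URL with a keyword constraint and paging, using only the Python standard library. It is not the OWSLib-based code used by the harvester; the function name, the `dc:subject` queryable and the example endpoint are assumptions, and support for CQL constraints varies between servers.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def csw_getrecords_url(
    endpoint: str,
    keyword: Optional[str] = None,
    start: int = 1,
    page_size: int = 50,
) -> str:
    """Build a CSW 2.0.2 GetRecords request (KVP encoding) for one page of records."""
    params = {
        "service": "CSW",
        "version": "2.0.2",
        "request": "GetRecords",
        "typeNames": "csw:Record",
        "elementSetName": "full",
        "resultType": "results",
        "startPosition": start,
        "maxRecords": page_size,
    }
    if keyword:
        # Assumed filter: match records whose subject equals the keyword.
        params["constraintLanguage"] = "CQL_TEXT"
        params["constraint_language_version"] = "1.1.0"
        params["constraint"] = f"dc:subject = '{keyword}'"
    return f"{endpoint}?{urlencode(params)}"


# example.com is a placeholder endpoint.
url = csw_getrecords_url("https://example.com/csw", keyword="soil-mission", start=51)
query = parse_qs(urlsplit(url).query)
print(query["constraint"][0])
print(query["startPosition"][0])
```

A harvester would request consecutive pages by increasing `startPosition` until the server reports that no further records match.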

INSPIRE

Although the INSPIRE Geoportal does offer a CSW endpoint, we have not been able to harvest from it for technical reasons. Instead we have developed a dedicated harvester that uses the Elasticsearch API endpoint of the Geoportal. Once the technical issue has been resolved, use of the CSW harvest endpoint is preferable.

ESDAC

The ESDAC catalogue is an instance of Drupal CMS. We have developed a dedicated harvester that scrapes the ESDAC HTML elements to extract Dublin Core metadata. Metadata is extracted for datasets, maps (EUDASM) and documents. Occasionally a DOI is mentioned as part of the HTML; this DOI is then used as the identifier for the resource, otherwise the resource URL is used as the identifier. If the DOI is not known to the system yet, OpenAire will be queried to capture additional metadata on the resource.
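The identifier rule described above (use the DOI if the page mentions one, otherwise fall back to the resource URL) can be sketched as follows. The regular expression and the function name are assumptions for illustration; the actual scraper may locate the DOI differently.

```python
import re

# Assumed pattern for DOIs of the common form 10.<registrant>/<suffix>.
DOI_PATTERN = re.compile(r"""\b10\.\d{4,9}/[^\s"'<>]+""")


def choose_identifier(html_fragment: str, resource_url: str) -> str:
    """Return the first DOI found in the HTML, else the resource URL."""
    match = DOI_PATTERN.search(html_fragment)
    if match:
        # Drop punctuation that commonly trails a DOI in running text.
        return match.group(0).rstrip(".,;)")
    return resource_url


# Placeholder HTML and URL; 10.1234/soil.2024 is not a real DOI.
page = '<p>DOI: <a href="https://doi.org/10.1234/soil.2024">link</a></p>'
print(choose_identifier(page, "https://example.com/resource/1"))
print(choose_identifier("<p>No identifier here</p>", "https://example.com/resource/1"))
```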

Prepsoil portal

Prepsoil is built on a headless CMS. The CMS at times provides an API to retrieve datasets, knowledge items, living labs, lighthouses and communities of practice. The API provides minimal metadata; occasionally a DOI is included. The DOI is used to capture additional metadata from OpenAire.

News feeds

From the project websites mentioned at https://mission-soil-platform.ec.europa.eu/project-hub/funded-projects-under-mission-soil a harvester algorithm fetches the contents of the RSS feed, if the website provides one. The harvested entries are stored in a database.
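A minimal sketch of extracting news entries from an RSS 2.0 feed with the Python standard library is given below. The sample feed and the output field names are invented for illustration; Atom feeds use namespaced elements and would need separate handling, and the actual harvester may rely on a dedicated feed library.

```python
import xml.etree.ElementTree as ET


def parse_rss_items(feed_xml: str) -> list[dict]:
    """Extract title, link and publication date from each RSS 2.0 item."""
    root = ET.fromstring(feed_xml)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "published": (item.findtext("pubDate") or "").strip(),
        })
    return items


# Invented sample feed, standing in for a project website's RSS output.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <title>Example project news</title>
  <item>
    <title>Field campaign started</title>
    <link>https://example.com/news/1</link>
    <pubDate>Mon, 03 Feb 2025 09:00:00 GMT</pubDate>
  </item>
</channel></rss>"""

for entry in parse_rss_items(SAMPLE_FEED):
    print(entry["title"], entry["link"])
```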

Metadata Harmonization

Once stored in the harvest sources database, a second process is triggered which harmonizes the sources to the desired metadata profile. These processes are split by design, so that a failure in metadata processing does not require fetching the remote content again.

The table below lists the supported source models:

| source | platform |

|---|---|

| Dublin Core | Cordis |

| Extended Dublin Core | ESDAC |

| Datacite | OpenAire, Zenodo, DOI |

| ISO19115:2005 | Bonares, INSPIRE |

Metadata is harmonised to a DCAT RDF representation.
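The idea of harmonising several source models to one target profile can be sketched as a field mapping. The mappings below are heavily simplified, hypothetical stand-ins for the real source models (for example, real Datacite records are more deeply nested), and the target keys only borrow Dublin Core Terms property names for readability.

```python
# Hypothetical, flattened field mappings; the real profiles are richer.
FIELD_MAP = {
    "dublin_core": {
        "title": "dct:title",
        "description": "dct:description",
        "identifier": "dct:identifier",
    },
    "datacite": {
        "titles": "dct:title",
        "descriptions": "dct:description",
        "doi": "dct:identifier",
    },
}


def harmonise(record: dict, source_model: str) -> dict:
    """Map a source record onto the common target properties."""
    harmonised = {}
    for source_field, target_property in FIELD_MAP[source_model].items():
        value = record.get(source_field)
        # List-valued source fields are reduced to their first entry here.
        if isinstance(value, list):
            value = value[0] if value else None
        if value is not None:
            harmonised[target_property] = value
    return harmonised


# Placeholder records; 10.1234/example is not a real DOI.
print(harmonise({"title": "Soil map", "identifier": "x-1"}, "dublin_core"))
print(harmonise({"titles": ["Soil atlas"], "doi": "10.1234/example"}, "datacite"))
```

Keeping the mapping in data rather than in code means a new source model can be supported by adding one dictionary entry.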

Metadata harmonization relies on some supporting modules. OWSLib is a module to parse various source metadata models, including iso19139:2007. A transformation script from semic-eu/iso19139-to-dcat-ap.xslt, in combination with lxml and rdflib, is used to convert iso19139:2007 metadata to RDF, serialised as Turtle.

Harmonised metadata is transformed to either iso19139:2007 or Dublin Core and then ingested by the pycsw software, which powers the SoilWise Catalogue, using an automated process running at intervals. At this moment the pycsw catalogue software requires a dedicated database structure, so this step converts the harmonised metadata database to that model. In the next iterations we aim to remove this step and enable the catalogue to query the harmonised model directly.

Metadata Augmentation

The metadata augmentation processes are described elsewhere. What is relevant here is that the output of these processes is integrated in the harmonised metadata database.

Metadata RDF turtle serialization

The harmonised metadata model is based on the DCAT ontology. In this step the content of the database is written to RDF.

Harmonized metadata is transformed to RDF in preparation of being loaded into the triple store (see also Knowledge Graph).

RDF to Triple store

This is a component which on request can dump the content of the harmonised database as an RDF quad store. This service is requested at intervals by the triple store component. In a next iteration we aim to push the content to the triple store at intervals.

Duplication identification

A resource can be described in multiple Catalogues, identified by a common identifier. Each of the harvested instances may contain duplicate, alternative or conflicting statements about the resource. SoilWise Repository aims to persist a copy of the harvested content (also to identify if the remote source has changed). For this iteration we store the first copy, and capture on what other platforms the record has been discovered. OpenAire already has a mechanism to indicate in which platforms a record has been discovered, this information is ingested as part of the harvest. An aim of this exercise is also to understand in which repositories a certain resource is advertised.
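The approach for this iteration (store the first copy, and record on which other platforms the same identifier was found) can be sketched as follows. The record structure and the `merge_harvested` function are assumptions for illustration only.

```python
def merge_harvested(records: list[dict]) -> dict[str, dict]:
    """Keep the first copy per identifier and list every platform it was seen on."""
    merged: dict[str, dict] = {}
    for record in records:
        identifier = record["identifier"]
        if identifier not in merged:
            # First occurrence: store this copy and start the source list.
            merged[identifier] = {**record, "sources": [record["platform"]]}
        elif record["platform"] not in merged[identifier]["sources"]:
            # Later occurrence: only note the additional platform.
            merged[identifier]["sources"].append(record["platform"])
    return merged


# Placeholder records; 10.1234/example is not a real DOI.
harvested = [
    {"identifier": "10.1234/example", "platform": "ESDAC", "title": "Soil map"},
    {"identifier": "10.1234/example", "platform": "OpenAire", "title": "Soil Map (v2)"},
    {"identifier": "https://example.com/r/2", "platform": "ISRIC", "title": "Profiles"},
]

result = merge_harvested(harvested)
print(result["10.1234/example"]["title"])    # Soil map
print(result["10.1234/example"]["sources"])  # ['ESDAC', 'OpenAire']
```

Conflicting statements from later copies, such as the differing title above, are not reconciled in this sketch; only their origin is recorded.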

In the first development iteration, a visualization of the source repositories is available as a dedicated section in the SoilWise Catalogue.

Architecture

Technological Stack

| Technology | Description |

|---|---|

| Git actions/pipelines | Automated processes which run at intervals or events. Git platforms typically offer this functionality including extended logging, queueing, and manual job monitoring and interaction (start/stop). |

Main Sequence Diagram

Each harvester runs in a dedicated container. The result of the harvester is ingested into a (temporary) storage. Follow-up processes (harmonization, augmentation, validation) pick up the results from the temporary storage.

flowchart LR

c[CI-CD] -->|task| q[/Queue\]

r[Runner] --> q

r -->|deploys| hc[Harvest container]

hc -->|harvests| db[(temporary storage)]

hc -->|data cleaning| db[(temporary storage)]

Integrations & Interfaces

The automatic metadata harvesting component will show its full potential when it is tightly connected within the SWR to (1) the SWR Catalogue, (2) Metadata authoring and (3) ETS/ATS, i.e. test suites.

Key Architectural Decisions - Harvesting Strategy

OGC-CSW

Many (spatial) catalogues, such as INSPIRE GeoPortal, Bonares and ISRIC, advertise their metadata via the Catalogue Service for the Web (CSW) standard.

CORDIS - OpenAire

Cordis does not capture many metadata properties. We harvest the title of a project publication and, if available, the DOI. In those cases where a resource is identified by a DOI, additional metadata can be found in OpenAire via the DOI. For those resources a harvester fetches additional metadata from OpenAire.

A second mechanism is available to link from Cordis to OpenAire: the RCN. The OpenAire catalogue can be queried using an RCN filter to retrieve only resources relevant to a project. This work is still in preparation.

Not all DOIs registered in Cordis are available in OpenAire. OpenAire only lists resources with an open access license. Other DOIs can be fetched from the DOI registry directly or via Crossref.org. This work is still in preparation. Detailed technical information can be found in the technical description.

OpenAire and other sources

The software used to query OpenAire by DOI or by RCN is not limited to DOIs or RCNs that come from Cordis. Any list of DOIs or RCNs can be handled by the software.

Risks & Limitations

Repository Storage

Introduction

Overview and Scope

The SoilWise repository aims at merging and seamlessly providing different types of content. To host this content, drive internal processes efficiently and offer performant end user functionality, different storage options are implemented:

- A relational database management system for the storage of the core metadata of both data and knowledge assets.

- A Triple Store to store the metadata of data and knowledge assets as a graph, linked to soil health and related knowledge as a linked data graph.

- Git for storage of user-enhanced metadata.

Intended Audience

Storage is a backend component, therefore we only expect a maintenance role:

- SWC Administrator monitoring the health status, logs, signaling maintenance issues etc.

- SWC Maintainer performing corrective / adaptive maintenance tasks that require database access and updates.

PostgreSQL RDBMS: storage of raw and augmented metadata

Info

Current version: Postgres release 12.2

Technology: Postgres

Access point: SQL

A "conventional" RDBMS is used to store the (augmented) metadata of data and knowledge assets. There are several reasons for choosing an RDBMS as the main source for metadata storage and metadata querying:

- An RDBMS provides good options to efficiently structure and index its contents, thus allowing performant access for both internal processes and end user interface querying.

- An RDBMS easily allows implementing constraints and checks to keep data and relations consistent and valid.

- Various extensions, e.g. search engines, are available to make querying, aggregations even more performant and fitted for end users.

Key Features

The Postgres database serves as the destination and/or source for many of the backend processes of the SoilWise Catalogue. Its key features are:

- Raw metadata storage - The harvester process uses it to store the raw results of the metadata harvesting of the different resources that are currently connected.

- Storage of Augmented metadata - Various metadata augmentation jobs use it as input and write their output to this data store.

- Source for Search Index processing - This database is also the source for denormalisation, processing and indexing metadata through the Solr framework.

- Source for UI querying - While Solr is the main resource for end user querying through the catalogue UI, the catalogue also queries the Postgres database.

Virtuoso Triple Store: storage of SWR knowledge graph

Info

Current version: Virtuoso release 07.20.3239

Technology: Virtuoso

Access point: Triple Store (SWR SPARQL endpoint) https://repository.soilwise-he.eu/sparql

A Triple Store is implemented as part of the SWR infrastructure to allow a more flexible linkage between the knowledge captured as metadata and various internal and external knowledge sources, particularly taxonomies, vocabularies and ontologies that are implemented as RDF graphs. Results of the harvesting and metadata augmentation that are stored in the RDBMS are converted to RDF and stored in the Triple Store.

Key Features

A Triple Store, implemented in Virtuoso, is integrated for parallel storage of metadata because it offers several capabilities:

- Semantic linkage — It allows the linking of different knowledge models, e.g. to connect the SWR metadata model with existing and new knowledge structures on soil health and related domains.

- Cross-domain reasoning — It allows reasoning over the relations in the stored graph, and thus allows connecting and smartly combining knowledge from those domains.

- Semantic querying — The SPARQL interface offered on top of the Triple Store allows users and processes to use such reasoning and exploit previously unconnected sets of knowledge.
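As an illustration of semantic querying, the sketch below prepares a SPARQL query against the SWR SPARQL endpoint using the standard SPARQL protocol over HTTP GET. The query assumes that resources are typed as `dcat:Dataset` and carry a `dct:title`, which follows from the DCAT-based metadata model but is not confirmed here for the deployed graph; the function name is also an assumption. The request is only built, not sent.

```python
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request

SPARQL_ENDPOINT = "https://repository.soilwise-he.eu/sparql"

# Assumed graph shape: DCAT datasets with a Dublin Core title.
QUERY = """
PREFIX dcat: <http://www.w3.org/ns/dcat#>
PREFIX dct: <http://purl.org/dc/terms/>
SELECT ?resource ?title WHERE {
  ?resource a dcat:Dataset ;
            dct:title ?title .
} LIMIT 5
"""


def build_sparql_request(query: str, endpoint: str = SPARQL_ENDPOINT) -> Request:
    """Prepare a SPARQL protocol GET request that asks for JSON results."""
    url = f"{endpoint}?{urlencode({'query': query})}"
    return Request(url, headers={"Accept": "application/sparql-results+json"})


request = build_sparql_request(QUERY)
print(request.get_method())  # GET
# The query survives URL encoding unchanged:
print(parse_qs(urlsplit(request.full_url).query)["query"][0] == QUERY)  # True
```

To execute the query, the prepared request can be passed to `urllib.request.urlopen` and the response body parsed with `json.load`.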

Apache Lucene: Open-source search engine software library

Info

Current version: Apache Lucene release x.x.x

Technology: Apache Lucene

Access point: Via the Apache Solr API https://

The SoilWise Catalogue uses a dedicated index (Apache Lucene) to efficiently index and store the harvested and augmented metadata, as well as the knowledge extracted from documents referred to through the metadata records (currently only supporting PDF format). Access to the index (both indexing and querying) is provided through the Apache Solr search framework.

Key Features

Apache Lucene offers a range of options that support increasing the search performance and the quality of search results. It also makes it possible to implement strategies for result ranking, faceted search, etc. that can improve the end user experience:

- Search performance - Apache Lucene is a broadly adopted and well maintained search index that can dramatically speed up search and improve the precision of search results.

- Integration - Integration with Apache Solr provides tools and programmatic access to configure, manage, optimize and query the indexed content.

- Lexical Search - Combined with the Apache Solr framework, Lucene offers support for lexical search (based on matching the literals of words and their variants), faceted search and ranking.

- Semantic Search - Combined with the Apache Solr framework, Lucene offers support for generating and storing embeddings that support semantic search (based on the meaning of data) and associated AI functions.
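A lexical, faceted and paginated query through the Solr API can be sketched as a request URL built from standard Solr query parameters (`q`, `fq`, `start`, `rows`, `facet`, `facet.field`, `wt`). The base URL, the core name `records` and the field name `type` are placeholders, since the actual access point and schema are not given above.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def solr_select_url(
    base_url: str,
    text: str,
    resource_type: Optional[str] = None,
    page: int = 1,
    page_size: int = 10,
) -> str:
    """Build a Solr /select URL for a paginated full-text query with one facet."""
    params = [
        ("q", text),
        ("start", (page - 1) * page_size),  # offset of the first result
        ("rows", page_size),
        ("facet", "true"),
        ("facet.field", "type"),  # hypothetical field name
        ("wt", "json"),
    ]
    if resource_type:
        # Filter query restricting results to one resource type.
        params.append(("fq", f'type:"{resource_type}"'))
    return f"{base_url}/select?{urlencode(params)}"


# Placeholder base URL and core name.
url = solr_select_url(
    "http://localhost:8983/solr/records", "soil erosion",
    resource_type="dataset", page=3,
)
params = parse_qs(urlsplit(url).query)
print(params["start"][0], params["rows"][0])  # 20 10
print(params["fq"][0])  # type:"dataset"
```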

Git: storage of code and configuration

Info

Technology: Gitlab and GitHub

Access point: https://github.com/soilwise-he

Git is a multi-purpose environment for storing and managing software and documentation, versioning and configuration, which also offers various functions that support the management and monitoring of the software development process.

Key Features

Git is an acknowledged platform to store, version, configure and document software, with additional features for software and software development management. The key features used in SoilWise are:

- Code storage, version and configuration management - Git is used to deposit and manage versions of SoilWise code, documentation and configurations.

- Issue and release management - SoilWise uses the issue and release management to document, monitor and track the development of software components and their integration.

- Process automation - Git defines and runs automated pipelines for deployment, augmentation, validation and harvesting external sources.

Integrations & Interfaces

Key Architectural Decisions

| Decision | Rationale |

|---|---|

| RDF/Triple Store for semantics | Allows definition of advanced semantic structures and cross-domain interlinkage. Allows semantic reasoning, both internal and by external clients. |

| To be further extended | ... |

Risks & Limitations

| Risk / Limitation | Description | Mitigation |

|---|---|---|

| Inconsistency between RDBMS and Triple Store | Parallel sources and query results might deviate if processes are not aligned. | Monitoring procedures and corrective actions to be documented for maintenance |

| Integration issues for Triple store | Lack of infrastructure and/or technical knowledge might hinder integration. | Continuous alignment with JRC technical team |

| Integration issues for process automation | Currently implemented process automation through Git might not fit JRC | Continuous alignment with JRC technical team |

| To be further extended | ... |

Metadata Catalogue

Info

Current version:

Technology: Apache Solr, React, pycsw

Project: Catalogue UI; Solr; pycsw

Access point: https://client.soilwise-he.containers.wur.nl/catalogue/search

Introduction

Overview and Scope

The Metadata Catalogue is a central piece of the architecture, giving access to individual metadata records. In the catalogue domain, various effective metadata catalogues have been developed around the standards issued by the OGC (Catalogue Service for the Web (CSW) and OGC API Records), the Open Archives Initiative (OAI-PMH), W3C (DCAT), FAIR science (Datacite) and the search engine community (schema.org). For our first project iteration we selected the pycsw software, which supports most of these standards. In the second iteration pycsw continues to provide standardized APIs; however, to improve search performance and user experience, it has been supplemented by Apache Solr and a React frontend.

Intended Audience

The SoilWise Metadata Catalogue targets the following user groups:

- Soil scientists and researchers working with European soil health data and seeking catalogued knowledge, publications, and datasets.

- Living Labs' data scientists working with European soil health data and seeking catalogued knowledge, publications, and datasets.

- Mission Soil Project Data Managers searching for datasets published by other Mission Soil Projects, or verifying whether their published data are recognized by SoilWise (EUSO).

- Policy Makers working with European soil health data and seeking catalogued knowledge, publications, and datasets.

Key Features

User interface

The SoilWise Metadata Catalogue adopts a React frontend, focusing on:

- Paginated search results - Search results are displayed per page in ranked order, in the form of an overview table showing the resource type, title, abstract, date and a preview.

- Full-text search - including autocomplete.

- Resource type filter - enabling to filter out certain types of resources, e.g. journal articles, datasets, reports, software.

- Term filter - enabling to filter out resources containing certain keywords, resources published by specific projects, etc.

- Date filter - enabling to filter out resources based on their creation, or revision date

- Spatial filter - enabling to filter out resources based on their spatial extent using countries or regions, drawn bounding box, vicinity of user's location, or by searching for geographical names.

- Record detail view - After clicking an item in the results table, the record's detail is displayed at a unique URL to facilitate sharing. The record's detail currently comprises: the record's type tag, full title, full abstract, keyword tags, a preview of the record's geographical extent, the record's preview image (if available), information about the relevant HE funding project, a list of source repositories, an indication of link availability (see Link liveliness assessment), the last update date, and all other items of the record.

- Resource preview - Three types of preview are currently supported: (1) Display of the resource's geographical extent, which is available in the record's detail as well as in the search results list. (2) Display of a graphic preview (thumbnail) if one is advertised in the metadata. (3) Map preview of OGC:WMS services advertised in the metadata, which enables standard simple user interaction (zoom, changing layers).

- Display results of metadata augmentation - Results of metadata augmentation are stored in a dedicated database table. The results are merged into the harvested content during publication to the catalogue. At the moment it is not possible to differentiate between original and augmented content. For the next iterations we aim to make this distinction clearer.

- Display links of related information - Download of data "as is" is currently supported through the links section from the harvested repository. Note, "interoperable data download" has been only a proof-of-concept in the first iteration phase, i.e. is not integrated into the SoilWise Catalogue. Download of knowledge source "as is" is currently supported through the links section from the harvested repository.
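As an illustration of how the filters listed above could translate into a search request, the following minimal sketch maps UI state to Solr's common query parameters (`q`, `fq`, `rows`, `start`). The function name and the field names `type` and `date` are assumptions made for this example; the actual Solr schema used by the catalogue may differ.

```python
from urllib.parse import urlencode


def build_solr_params(text, resource_type=None, date_from=None, date_to=None,
                      page=1, page_size=10):
    """Translate UI search state into Solr request parameters.

    The field names `type` and `date` are hypothetical; the real schema may differ.
    """
    params = [
        ("q", text or "*:*"),               # full-text query, or match-all when empty
        ("rows", page_size),                # page length
        ("start", (page - 1) * page_size),  # offset of the first result on this page
    ]
    if resource_type:
        # Filter queries narrow the result set without affecting ranking.
        params.append(("fq", f'type:"{resource_type}"'))
    if date_from or date_to:
        params.append(("fq", f"date:[{date_from or '*'} TO {date_to or '*'}]"))
    return params


params = build_solr_params("soil organic carbon", resource_type="dataset",
                           date_from="2020-01-01T00:00:00Z", page=3)
query_string = urlencode(params)
```

Keeping the filters in `fq` rather than in `q` is a common Solr pattern, because filter queries can be cached independently of the full-text query.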

Index and search strategies

The SoilWise Metadata Catalogue implements back-end index and search functions based on Apache Solr, focusing on:

- Denormalising metadata - Solr is set up as a document indexing infrastructure, working on rather "flat" textual formats instead of normalised database models. The first step is therefore a conversion to a denormalised structure, currently implemented as a (single) database view.

- Composing Solr documents - From the denormalised view, the individual metadata records are processed into Solr documents.

- Transforming/Indexing - Solr uses transformers to process and index Solr documents. This is a combination of sequential sub-processes (e.g. tokenizers) and configurations that determine how the documents are indexed and how they can be searched, ranked, faceted, etc.

- Search API - The Solr search API allows query access to the Solr index, so the UI (and other clients) can search the metadata through the index.
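The denormalisation and document composition steps above can be sketched as follows. This is a minimal illustration and not the project's implementation (which uses a database view): the input record structure and the output field names are assumptions chosen for the example.

```python
def to_solr_document(record):
    """Flatten a nested metadata record into a single-level Solr document.

    Input keys and output field names are hypothetical.
    """
    doc = {
        "id": record["identifier"],
        "title": record.get("title", ""),
        "abstract": record.get("abstract", ""),
        # Multi-valued fields: nested objects are reduced to lists of plain strings.
        "keywords": [k["label"] for k in record.get("keywords", [])],
        "contacts": [c["name"] for c in record.get("contacts", [])],
    }
    bbox = record.get("bbox")
    if bbox:
        # Solr spatial fields accept the form ENVELOPE(minX, maxX, maxY, minY).
        doc["geometry"] = "ENVELOPE({west}, {east}, {north}, {south})".format(**bbox)
    return doc


record = {
    "identifier": "example-record-1",
    "title": "Soil erosion map",
    "keywords": [{"label": "soil health"}, {"label": "erosion"}],
    "contacts": [{"name": "Example Institute", "role": "publisher"}],
    "bbox": {"west": 3.3, "east": 7.2, "south": 50.7, "north": 53.6},
}
document = to_solr_document(record)
```

Every value in the resulting dictionary is either a string or a list of strings, which is the "flat" shape a document index works with.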

Supported standards

To interact with the many relevant data communities, SoilWise aims to support a range of catalogue standards through the pycsw backend. For more information, see Integrations & Interfaces.

Architecture

Technological Stack

Backend

| Technology | Description |

|---|---|

| pycsw v3.0 | pycsw, written in Python, allows for the publishing and discovery of geospatial metadata via numerous APIs (CSW 2/CSW 3, OAI-PMH), providing a standards-based metadata and catalogue component of spatial data infrastructures. pycsw is Open Source, released under an MIT license, and runs on all major platforms (Windows, Linux, Mac OS X). |

| Apache Lucene v11.x | Apache Lucene is an open source, high-performance, Java-based search engine library. |

| Apache Solr v9.7.0 | Open source full text, vector and geo-spatial search framework on top of the Apache Lucene Index. |

| Java vx.x | |

| OpenStreetMap API | |

Frontend

| Technology | Description |

|---|---|

| React | Javascript framework that implements the search interface and access to Solr API |

| pycsw v3.0 | (deprecated) pycsw also offers its own user interface, which was used as the default in the previous SoilWise prototype. |

Infrastructure

| Component | Technology |

|---|---|

| Container | Docker (multi-stage build, Eclipse Temurin JDK 21) |

| CI/CD | GitLab CI with semantic release (conventional commits) |

| Orchestration | Kubernetes (liveness/readiness probes) |

Main Component Diagram

Main Sequence Diagram

Integrations & Interfaces

| Service | Auth | Endpoint | Purpose |

|---|---|---|---|

| Catalogue Service for the Web (CSW) | | https://repository.soilwise-he.eu/cat/csw | Catalogue Service for the Web (CSW) is a standardised pattern to interact with (spatial) catalogues, maintained by OGC. |

| OGC API - Records | | https://repository.soilwise-he.eu/cat/openapi | OGC is currently in the process of adopting a revised edition of its catalogue standards. The new standard is called OGC API - Records. OGC API - Records is closely related to Spatio Temporal Asset Catalog (STAC), a community standard in the Earth Observation community. |

| Protocol for metadata harvesting (OAI-PMH) | | https://repository.soilwise-he.eu/cat/oaipmh | The Open Archives Initiative has defined a common protocol for metadata harvesting (OAI-PMH), which is adopted by many catalogue solutions, such as Zenodo, OpenAIRE and CKAN. The OAI-PMH endpoint of SoilWise can be harvested by these repositories. |

| Spatio Temporal Asset Catalog (STAC) | | https://repository.soilwise-he.eu/cat/stac/openapi | |

| OpenSearch | | https://repository.soilwise-he.eu/cat/opensearch | |
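To show how a client might address two of the endpoints above, here is a small sketch that only builds request URLs (it performs no network calls). The query parameters are the standard ones defined by CSW (`service`, `version`, `request`) and OAI-PMH (`verb`, `metadataPrefix`); the helper names, the default CSW version and the default metadata prefix are assumptions for this example.

```python
from urllib.parse import urlencode

BASE_URL = "https://repository.soilwise-he.eu/cat"  # taken from the endpoint table


def csw_get_capabilities_url(version="2.0.2"):
    """URL of a CSW GetCapabilities request (version is an assumed default)."""
    query = urlencode({"service": "CSW", "version": version, "request": "GetCapabilities"})
    return f"{BASE_URL}/csw?{query}"


def oaipmh_list_records_url(metadata_prefix="oai_dc"):
    """URL of an OAI-PMH ListRecords request (Dublin Core prefix assumed)."""
    query = urlencode({"verb": "ListRecords", "metadataPrefix": metadata_prefix})
    return f"{BASE_URL}/oaipmh?{query}"


csw_url = csw_get_capabilities_url()
oai_url = oaipmh_list_records_url()
```

Either URL can then be fetched with any HTTP client; both protocols return XML responses.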

Key Architectural Decisions

Risks & Limitations

| Risk / Limitation | Description | Mitigation |

|---|---|---|

| Transferability | The differences in technology stack between the implementing consortium and the final owner (JRC) might lead to transferability and integration issues | Use of broadly adopted open source products. Alignment with JRC technical team |

| Metadata quality | The performance of the search functionality is highly dependent on the completeness and quality of the harvested metadata, which is out of scope for SoilWise. | Metadata augmentation will partly mitigate this. |

| Transparency and explainability | The dependency on metadata completeness and quality in combination with the large amount of interdependent options for (fuzzy) search strategies and the different combinations of UI search features will make it hard to understand the logic behind search results. | Documentation of metadata augmentation, search strategies etc. |

| Usability | The diversity of user groups and their requirements and expectations makes it difficult to find a balance between functionality, complexity and user-friendliness. | Iterative approach and validation/testing with user groups to align. |

Soil Companion (chatbot)

Info

Current version: 1.2.x

Technology: Retrieval Augmented Generative Artificial Intelligence

Project: Soil Companion

Access point: https://soil-companion.containers.wur.nl/app/index.html

Introduction

Overview and Scope

The Soil Companion is an AI chatbot developed in the SoilWise project. It provides an intelligent conversational interface through which users can explore European soil metadata, query global and country-specific soil data services, and receive answers grounded in the SoilWise knowledge repository.

The chatbot uses an agentic tool-calling approach: a large language model (LLM) autonomously decides which external data sources to consult for each question, executes the relevant tool calls, and synthesizes the results into a coherent response. Answers are enriched with auto-generated links to SoilWise vocabulary terms and Wikipedia articles. A sidebar Insight panel displays related SKOS vocabulary concepts and clickable chips that allow users to explore connected topics.

Intended Audience

The Soil Companion targets the following user groups:

- Soil scientists and researchers working with European soil health data and seeking catalogued knowledge, publications, and datasets from the SoilWise repository.

- Agricultural experts and extension officers looking for soil property data, field-level KPIs, and crop information to inform land management decisions.

- Students and educators exploring soil science concepts through a conversational interface that provides definitions, vocabulary hierarchies, and links to authoritative sources.

- Farmers and land managers (in selected regions) who want accessible field-level agricultural data such as crop history, soil physical properties, and greenness indices.

Key Features

The chatbot combines agentic LLM tool calling with retrieval-augmented generation and post-response enrichment to deliver grounded, linked answers. The key features are:

- Agentic tool calling — The LLM autonomously decides which of the available tool integrations to invoke (catalog search, SoilGrids, AgroDataCube, Wikipedia, vocabulary SPARQL), executing up to 10 sequential tool-call iterations per query.

- RAG from local core knowledge — Documents (PDF, text, Markdown) are split into chunks, embedded with a local model (AllMiniLmL6V2), and stored in memory. Relevant chunks are retrieved by cosine similarity and injected into the prompt.

- Response enrichment — After the LLM generates a response, auto-linkers scan for vocabulary terms and Wikipedia article titles, inserting navigable links into the rendered output.

- Insight panel — The frontend extracts SoilWise and Wikipedia links from responses and displays broader/narrower/related vocabulary concepts with definitions in a sidebar panel.

- Token streaming — Responses are streamed token-by-token over WebSocket, giving users immediate visual feedback.

- Feedback loop — Thumbs up/down ratings are logged to daily JSONL files; evaluation tools compute quality metrics (like rate, NSAT, Wilson lower bound).
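Two of the feedback metrics named above, like rate and Wilson lower bound, can be computed from the thumbs up/down counts as in the sketch below. The function names are illustrative and the 95% confidence level (z = 1.96) is an assumption; the project's evaluation tools may use different settings.

```python
import math


def like_rate(up, down):
    """Share of positive votes; 0.0 when there are no votes."""
    total = up + down
    return up / total if total else 0.0


def wilson_lower_bound(up, down, z=1.96):
    """Lower bound of the Wilson score interval for the true like rate.

    z = 1.96 corresponds to a 95% confidence level (assumed here).
    """
    n = up + down
    if n == 0:
        return 0.0
    p = up / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)


few_votes = wilson_lower_bound(9, 1)
many_votes = wilson_lower_bound(90, 10)
```

Both samples have a like rate of 0.9, but the bound for the larger sample is higher, which is why the Wilson lower bound is useful for comparing answers with very different numbers of votes.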

Architecture

Technological Stack

Backend (JVM)

| Component | Technology |

|---|---|

| Language | Scala 3.8.x on JDK 17+ (tested 17–25) |

| Build | SBT 1.11.x / 1.12.x (cross-build JS/JVM) |

| LLM Framework | LangChain4j 1.10.x (OpenAI integration, agentic tool calling, embeddings, RAG) |

| LLM Provider | OpenAI (gpt-4o-mini for chat, gpt-4o for reasoning, text-embedding-3-small for embeddings) |

| Local Embeddings | AllMiniLmL6V2 (offline, ~33 MB model for RAG document retrieval) |

| Vector Store | In-memory embedding store (with experimental Chroma support) |

| Logging | SLF4J 3.0.x + Logback 1.5.x (daily rotation, 30-day retention) |

| Document Parsing | Apache Tika (PDF, text, Markdown) |

Frontend (Browser)

| Component | Technology |

|---|---|

| Language | Scala.js (compiled to JavaScript) |

| Maps | Leaflet 1.9.x |

| Communication | WebSocket (real-time streaming) |

Infrastructure

| Component | Technology |

|---|---|

| Container | Docker (multi-stage build, Eclipse Temurin JDK 21) |

| CI/CD | GitLab CI with semantic release (conventional commits) |

| Orchestration | Kubernetes (liveness/readiness probes) |

Main Components Diagram

High-level component overview:

graph TD

subgraph Browser ["Browser (Scala.js)"]

App["SoilCompanionApp

- WebSocket client (real-time chat streaming)

- Authentication & session management

- Location picker (Leaflet map)

- Insight panel (vocabulary concepts, Wikipedia links)

- File upload, feedback, theme toggle"]

end

Browser -- "WebSocket + HTTP" --> Server

subgraph Server ["SoilCompanionServer (Cask, JVM)"]

direction TB

Routes["Routes: /healthz, /readyz, /login, /logout, /session,

/subscribe/:id (WS), /query, /clear, /upload,

/feedback, /location, /vocab, /app/*"]

subgraph Internal_Modules [" "]

direction LR

Config["Config

(PureConfig)"]

Logger["Feedback

Logger"]

SessMgmt["Session Management

(ConcurrentHashMaps)"]

end

subgraph Assistant ["Assistant (per session)"]

AIServices["LangChain4j AiServices

- StreamingChatModel (OpenAI)

- ChatMemory (50 messages)

- RAG ContentRetriever (embeddings + local docs)

- Tool methods (5 integrations)"]

end

subgraph PostLinking ["(post-response linking)"]

direction LR

VL[VocabLinker]

WL[WikipediaLinker]

end

end

Server -- "HTTP calls" --> External

subgraph External ["External Services"]

direction TB

E1["OpenAI API

(LLM, embed)"]

E2["Solr

(catalog)"]

E3["ISRIC SoilGrids v2.0

(global soil properties)"]

E4["SoilWise

SPARQL"]

E5["Wikipedia

(6 langs)"]

E6["WUR AgroDataCube v2

(NL field data)"]

end

%% Styling

style Browser fill:#f9f9f9,stroke:#333,stroke-width:2px

style Server fill:#fff,stroke:#333,stroke-width:2px

style External fill:#f9f9f9,stroke:#333,stroke-width:2px

style Assistant fill:#fff,stroke:#333,stroke-dasharray: 5 5

style Internal_Modules fill:none,stroke:none

style PostLinking fill:none,stroke:none

Main Sequence Diagram

User query to response flow:

sequenceDiagram

autonumber

participant C as Client (Browser)

participant S as Server (JVM)

participant E as External APIs

Note over C, S: Session Initialization

C->>S: GET /session

S-->>C: { sessionId: UUID }

C->>S: WS /subscribe/:sessionId

Note right of S: store connection

S-->>C: connection established

C->>S: POST /login

Note right of S: validate credentials

S-->>C: { ok: true }

Note over C, S: Chat Interaction

C->>S: POST /query

{ sessionId, content }

S-->>C: QueryEvent("received")

Note right of S: generate questionId

S-->>C: QueryEvent("thinking")

S-->>C: QueryEvent("retrieving_context")

S->>E: RAG: embed query

E-->>S: top-5 document chunks

S->>E: LLM: evaluate tools (OpenAI)

E-->>S: tool call decision

S->>E: Tool: e.g. Solr search (Solr)

E-->>S: search results

S->>E: LLM: synthesize answer (OpenAI)

E-->>S: token stream begins

loop Token Streaming

S-->>C: QueryPartialResponse(token)

end

Note right of S: stream complete

Apply VocabLinker

Apply WikipediaLinker

S-->>C: QueryEvent("links_added", linkedResponse)

S-->>C: QueryEvent("done")

Note left of C: render markdown,

show feedback buttons

C->>S: POST /feedback

{ questionId, vote }

Note right of S: log to JSONL
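The tool-calling part of this flow can be sketched as a bounded loop. The actual implementation relies on LangChain4j in Scala; the Python version below is only a language-neutral illustration, in which the `decide` callback stands in for the LLM and all names are hypothetical. The limit of 10 iterations is the one stated in the Key Features list.

```python
MAX_TOOL_ITERATIONS = 10  # limit stated in the Key Features list


def run_agent(question, decide, tools):
    """Run a bounded tool-calling loop.

    decide(question, observations) stands in for the LLM and returns either
    ("tool", name, argument) or ("answer", text).
    """
    observations = []
    for _ in range(MAX_TOOL_ITERATIONS):
        step = decide(question, observations)
        if step[0] == "answer":
            return step[1], observations
        _, name, argument = step
        try:
            result = tools[name](argument)
        except Exception as exc:
            # A failing tool yields a message instead of aborting the conversation.
            result = f"tool {name} failed: {exc}"
        observations.append((name, result))
    return "Tool-call limit reached; answering from the context gathered so far.", observations


def scripted_decide(question, observations):
    """Stub 'LLM': search the catalogue once, then answer."""
    if not observations:
        return ("tool", "catalog_search", question)
    return ("answer", f"Found {len(observations[0][1])} catalogue records.")


tools = {"catalog_search": lambda query: ["record-1", "record-2"]}
answer, trace = run_agent("soil erosion datasets", scripted_decide, tools)
```

Bounding the loop guarantees that a query terminates even if the model keeps requesting tools.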

Database Design

The Soil Companion does not use a traditional database. All runtime state is held in-memory; only feedback and application logs are persisted to disk.

In-memory state (per server process):

| Store | Type | Purpose |

|---|---|---|

| wsConnections | ConcurrentHashMap[String, WsChannelActor] | Active WebSocket connections |

| assistants | ConcurrentHashMap[String, Assistant] | LLM chat state per session |

| uploadedTexts | ConcurrentHashMap[String, String] | Temporary uploaded file content |

| uploadedFilenames | ConcurrentHashMap[String, String] | Original filenames of uploads |

| locationContexts | ConcurrentHashMap[String, String] | Location JSON per session |

| authenticatedSessions | ConcurrentHashMap[String, Boolean] | Authentication status |

| lastActivity | ConcurrentHashMap[String, Long] | Session inactivity tracking |

In-memory vector store:

| Store | Type | Purpose |

|---|---|---|

| embeddingStore | InMemoryEmbeddingStore[TextSegment] | Embedded knowledge document chunks |

Documents from the data/knowledge/ directory are loaded, split into 500-character chunks (100-character overlap), embedded using AllMiniLmL6V2, and stored at startup.
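The chunking parameters above (500 characters with a 100-character overlap) can be illustrated with a plain sliding window. This is a simplified sketch: the function name is hypothetical, and the splitter used by the application may additionally respect paragraph or sentence boundaries.

```python
def split_into_chunks(text, chunk_size=500, overlap=100):
    """Split text into fixed-size character windows that overlap their neighbours."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap  # each window starts 400 characters after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


sample = "".join(str(i % 10) for i in range(1200))
chunks = split_into_chunks(sample)
```

The overlap means that a sentence cut at a chunk boundary still appears complete in one of the two neighbouring chunks, at the cost of some duplicated text in the store.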

Persistent file storage:

| Data | Location | Format |

|---|---|---|

| Feedback | data/feedback-logs/feedback-YYYY-MM-DD.jsonl | Daily JSONL, auto-rotated, gzip compressed |

| Application logs | data/logs/soil-companion.log | Logback rolling file (30-day retention, gzip) |

| Knowledge documents | data/knowledge/ | PDF, text, Markdown (read-only at startup) |

| Vocabulary | data/vocab/soilvoc_concepts_*.csv | CSV (loaded at startup for auto-linking) |
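The daily feedback files can be pictured as append-only JSON Lines logs. The sketch below follows the file naming pattern from the table; the record fields `questionId` and `vote` come from the sequence diagram, while the `timestamp` field and the function name are assumptions. Rotation and gzip compression are not shown.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def log_feedback(log_dir, question_id, vote, now=None):
    """Append one feedback record to the JSONL file of the current day."""
    now = now or datetime.now(timezone.utc)
    path = Path(log_dir) / f"feedback-{now:%Y-%m-%d}.jsonl"
    path.parent.mkdir(parents=True, exist_ok=True)
    record = {"timestamp": now.isoformat(), "questionId": question_id, "vote": vote}
    with path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")  # one JSON object per line
    return path


with tempfile.TemporaryDirectory() as tmp:
    fixed = datetime(2025, 1, 15, 12, 0, tzinfo=timezone.utc)
    written = log_feedback(tmp, "q-123", "up", now=fixed)
    log_feedback(tmp, "q-124", "down", now=fixed)
    file_name = written.name
    lines = written.read_text(encoding="utf-8").splitlines()
```

Because each line is an independent JSON object, evaluation tools can stream the files without loading a whole day into memory.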

Integrations & Interfaces

| Service | Auth | Endpoint | Purpose |

|---|---|---|---|

| OpenAI API | Bearer token (OPENAI_API_KEY) | via LangChain4j | Chat completion (gpt-4o-mini), reasoning (gpt-4o), embeddings (text-embedding-3-small) |

| Solr (SoilWise Catalog) | Basic Auth (SOLR_USERNAME / SOLR_PASSWORD) | SOLR_BASE_URL | Search datasets and publications; full-text content retrieval |

| ISRIC SoilGrids v2.0 | None (public) | SOILGRIDS_BASE_URL | Soil property estimates at lat/lon (~250 m resolution) |

| SoilWise SPARQL | None | VOCAB_SPARQL_ENDPOINT | SKOS concept hierarchies (broader, narrower, related terms) |

| Wikipedia | None (public) | WIKIPEDIA_BASE_URL (per language) | Article search and content retrieval (6 languages) |

| WUR AgroDataCube v2 | Token header (AGRODATACUBE_ACCESS_TOKEN) | AGRODATACUBE_BASE_URL | NL field parcels, crop history, soil/crop KPIs |

All external service credentials and endpoints are configured through HOCON (application.conf) with environment variable overrides.
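The override pattern can be illustrated independently of HOCON. In the sketch below, the variable names are those listed in the table above, whereas the default values and the `load_settings` helper are placeholders invented for the example; the real defaults live in application.conf.

```python
import os

# Default values are placeholders for illustration, not the project's real defaults.
DEFAULTS = {
    "SOLR_BASE_URL": "http://localhost:8983/solr",
    "SOILGRIDS_BASE_URL": "https://soilgrids.example.org",
    "VOCAB_SPARQL_ENDPOINT": "https://sparql.example.org/query",
}
SECRETS = ("OPENAI_API_KEY", "AGRODATACUBE_ACCESS_TOKEN")


def load_settings(env=None):
    """Return (settings, missing_secrets); environment values win over defaults."""
    env = os.environ if env is None else env
    settings = {name: env.get(name, default) for name, default in DEFAULTS.items()}
    missing = [name for name in SECRETS if not env.get(name)]
    return settings, missing


settings, missing = load_settings({
    "SOLR_BASE_URL": "https://solr.internal/solr",
    "OPENAI_API_KEY": "sk-test",
})
```

A readiness check could report the `missing` list, so that a container without its credentials is not marked ready.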

HTTP endpoints exposed by the server:

| Method | Path | Purpose |

|---|---|---|

| GET | /healthz | Liveness probe (version, uptime) |

| GET | /readyz | Readiness probe (config + API key checks) |

| POST | /login | Demo authentication |

| POST | /logout | Session teardown |

| GET | /session | New session ID |

| WS | /subscribe/:sessionId | WebSocket for streaming chat |

| POST | /query | Submit a question |

| POST | /clear | Clear chat history |

| POST | /upload | Upload text/Markdown context |

| POST | /feedback | Submit thumbs up/down |

| POST | /location | Set geographic context |

| POST | /vocab | Batch vocabulary concept lookup |

| GET | /app/* | Static frontend assets |

WebSocket event types:

| Event | Direction | Purpose |

|---|---|---|

| received | Server → Client | Query acknowledged, questionId assigned |

| thinking | Server → Client | LLM is analysing the question |

| retrieving_context | Server → Client | RAG retrieval in progress |

| generating | Server → Client | LLM is generating the answer |

| links_added | Server → Client | Auto-linked response replacing the streamed version |

| done | Server → Client | Response complete |

| error | Server → Client | An error occurred |

| heartbeat | Server → Client | Keep-alive (every 15 seconds) |

| session_expired | Server → Client | Session timed out due to inactivity |

| prompt_truncated | Server → Client | Input was truncated to stay within limits |

| QueryPartialResponse | Server → Client | Single streamed token |
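A client can handle these events with a small state reducer, sketched below. The real frontend is written in Scala.js; this Python version only illustrates the logic, and the dictionary shape of the messages (`type`, `token`, `linkedResponse`, `message`) is an assumption rather than the actual wire format.

```python
def apply_event(state, event):
    """Fold one server event into the client-side view state."""
    kind = event["type"]
    if kind == "QueryPartialResponse":
        state["text"] += event["token"]           # append the streamed token
    elif kind == "links_added":
        state["text"] = event["linkedResponse"]   # replace with the auto-linked version
    elif kind == "done":
        state["complete"] = True
    elif kind == "error":
        state["error"] = event.get("message", "unknown error")
        state["complete"] = True
    elif kind != "heartbeat":
        state["status"] = kind                    # received, thinking, retrieving_context, ...
    return state


state = {"text": "", "status": None, "complete": False, "error": None}
events = [
    {"type": "received"},
    {"type": "thinking"},
    {"type": "QueryPartialResponse", "token": "Peat "},
    {"type": "QueryPartialResponse", "token": "soils"},
    {"type": "heartbeat"},
    {"type": "links_added", "linkedResponse": "[Peat](https://example.org/peat) soils"},
    {"type": "done"},
]
for event in events:
    apply_event(state, event)
```

The important detail is that `links_added` replaces the accumulated text instead of appending to it, matching the purpose given in the table.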

Key Architectural Decisions

| Decision | Rationale |

|---|---|

| Scala 3 + Scala.js cross-build | Enables shared domain models (QueryRequest, QueryEvent, QueryPartialResponse) between backend and frontend, eliminating serialization mismatches and reducing code duplication. |

| Cask HTTP micro-framework | Lightweight, Scala-native server with built-in WebSocket support. Suitable for a single-service chatbot without the overhead of a full application framework. |

| LangChain4j for LLM integration | Provides a mature JVM-native abstraction for tool calling, RAG, streaming, and chat memory — avoiding the need to call OpenAI APIs directly. The @Tool annotation enables declarative tool registration. |

| In-memory embedding store | Simplifies deployment (no external vector database required). Sufficient for the current knowledge base (~5 documents). Trade-off: state is lost on restart and capacity is limited by server memory. |

| AllMiniLmL6V2 for local embeddings | Runs offline without API calls, keeping RAG retrieval fast and cost-free. The ~33 MB model is small enough to bundle in the Docker image. |

| Per-session Assistant instances | Each session gets its own Assistant with isolated chat memory, tool state (e.g. AgroDataCube field context), and uploaded file context. Prevents cross-session contamination. |

| Post-response auto-linking | VocabLinker and WikipediaLinker run after the LLM completes, replacing the streamed response with an enriched version. This avoids asking the LLM to generate links (which is unreliable) while still providing navigable references. |

| WebSocket token streaming | Provides immediate visual feedback during LLM generation, reducing perceived latency. A 15-second heartbeat prevents proxy/ingress idle timeouts. |

| Environment variable configuration | All credentials and endpoints are overridable via environment variables, following 12-factor app principles for containerized deployment. |

| Demo authentication | A simple single-user mode enables local development and demonstrations without requiring an external identity provider. Production deployment would integrate with an external auth layer. |
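The post-response auto-linking decision can be illustrated with a simple term linker. This sketch is not the VocabLinker implementation: the function name, the matching rules (case-insensitive, whole words, longest label first) and the example.org URIs are assumptions made for the example.

```python
import re


def link_terms(text, vocabulary):
    """Wrap known vocabulary labels in Markdown links.

    vocabulary maps a label to its concept URI. Longer labels are tried first,
    so a multi-word term is preferred over a shorter label it contains.
    """
    labels = sorted(vocabulary, key=len, reverse=True)
    if not labels:
        return text
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(label) for label in labels) + r")\b",
        re.IGNORECASE,
    )
    lookup = {label.lower(): uri for label, uri in vocabulary.items()}
    # A single substitution pass avoids linking text inside an already inserted link.
    return pattern.sub(lambda m: f"[{m.group(0)}]({lookup[m.group(0).lower()]})", text)


vocabulary = {
    "soil": "https://example.org/vocab/soil",
    "soil organic carbon": "https://example.org/vocab/soc",
}
linked = link_terms("Soil organic carbon improves soil structure.", vocabulary)
```

Because linking is deterministic string processing, every inserted link is guaranteed to point at a concept that exists in the vocabulary, which is the reliability argument made in the table above.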

Risks & Limitations

| Risk / Limitation | Description | Mitigation |

|---|---|---|

| SoilGrids accuracy | Returned values are modelled estimates at ~250 m grid resolution, not field measurements. | Tool responses include explicit disclaimers advising users to verify with local data. |

| Single-user demo auth | The demo authentication mode uses a single configurable account with no roles or authorization. Not suitable for production multi-user scenarios. | Designed for development/testing; production deployment requires integration with an external authentication and authorization layer. |

| In-memory state loss | All session state, chat memory, uploaded context, and the embedding store are lost on server restart. | Acceptable for a demo/chatbot use case. Persistent vector store (Chroma) support exists experimentally for future use. |

| No horizontal scaling | All sessions are held in a single JVM process with no shared session store. | Sufficient for current usage levels. Horizontal scaling would require an external session store and load balancer. |

| External API availability | The chatbot depends on multiple external APIs (Solr, SoilGrids, AgroDataCube, OpenAgroKPI, Wikipedia). Downtime or rate limits on any of these services degrade functionality. | Tool methods handle errors gracefully, returning informative messages. The LLM can fall back to other tools if one fails. LangChain4j provides configurable retry logic (max 3 retries). |

| Geographic coverage | AgroDataCube and OpenAgroKPI are Netherlands-only. SoilGrids is global but at coarse resolution. | Tool descriptions inform the LLM of geographic scope so it can communicate limitations to users. |

| Knowledge base is static | Local documents are loaded and embedded only at startup. No hot-reload mechanism exists. | A server restart picks up new documents. This is acceptable for infrequently changing knowledge resources. |

| LLM hallucination | Despite RAG grounding and tool results, the LLM may still generate inaccurate statements. | System prompts instruct the model to include disclaimers and prefer tool-grounded answers. User feedback collection enables ongoing quality monitoring. |

| Prompt injection via uploads | Uploaded files and location contexts could contain adversarial content. | Input sanitization is applied; prompt size is capped at 120,000 characters; file uploads are limited to 200 KB. |

| CORS policy | The file upload endpoint uses a permissive Access-Control-Allow-Origin: * header. | Acceptable for demo deployment; should be tightened for production. |

Data publication support

Introduction

Overview and Scope

At the moment, SoilWise supports data publishers with the following tools:

- DOI Resolution Widget

- Tabular Soil Data Annotation to help users create semantic metadata for tabular datasets.

- INSPIRE Geopackage Transformation

- Soil Vocabulary Viewer, part of the Knowledge Graph component, visualizes and links different soil-domain vocabularies and terms.

Intended Audience

DOI Resolution Widget

Info

Current version:

Technology:

Project:

Access Point:

Overview and Scope

Key Features

Architecture

Technological Stack

Main Sequence Diagram

Integrations & Interfaces

Key Architectural Decisions

Risks & Limitations

Tabular Soil Data Annotation

Info

Current version:

Technology: Streamlit, Python, OpenAI API

Project: Tabular Data Annotator

Access Point: https://dataannotator-swr.streamlit.app/

Overview and Scope

DataAnnotator is a Streamlit-based web application designed to help users create semantic metadata for tabular datasets. It combines optional Large Language Model (LLM) assistance with semantic embeddings to annotate data columns with machine-readable descriptions, element definitions, units, methods, and vocabulary mappings.

The tool addresses the metadata annotation workflow by:

- Enabling manual annotation: Users directly enter descriptions for data columns

- Automating description generation (optional): If users have context documentation, LLMs can help extract and structure descriptions automatically

- Linking to vocabularies: Semantic embeddings match descriptions to controlled vocabularies for standardization

The LLM layer is optional—users can skip automated generation and manually provide descriptions, which the system will then semantically match to existing vocabulary terms.

Key Features

| Feature | Implementation | Purpose |

|---|---|---|

| Auto Type Detection | Statistical sampling | Identify data patterns |

| Manual Description Entry | Streamlit text inputs | Direct user annotation |

| Optional LLM Assistance | OpenAI/Apertus integration | Auto-extract descriptions from docs |

| Semantic Vocabulary Matching | FAISS vector search | Link descriptions to standard vocabularies |

| Context Awareness | PDF/DOCX import + prompting | Extract domain-specific info when available |

| Multi-format Export | flat csv/TableSchema/CSVW | Integration with downstream tools |

Architecture

Technological Stack

| Component | Technology |

|---|---|

| Frontend | Streamlit 1.51+ |

| Backend Logic | Python 3.12+ |

| LLM Integration | OpenAI API, Apertus HTTP |

| Embeddings | Sentence Transformers 5.1+ |

| Vector Search | FAISS (CPU) 1.12+ |

| File Parsing | PyPDF2, python-docx, openpyxl |

| ML Libraries | scikit-learn, NumPy |

Dependencies & Models

Python Packages:

- streamlit>=1.51.0 - Web UI framework

- openai>=2.7.2 - LLM API client

- sentence-transformers>=5.1.2 - Semantic embedding

- faiss-cpu>=1.12.0 - Vector similarity search

- pandas>=2.0 - Data manipulation

- openpyxl>=3.1.5 - Excel handling

- python-docx>=1.2.0 - Word document parsing

- pypdf2>=3.0.1 - PDF text extraction

Pre-trained Models/Data:

- Embedding Model: all-MiniLM-L6-v2 (384 dimensions, 22M parameters)

- FAISS Index: Pre-computed vocabulary embeddings (stored in the data/ directory)

Main Components

1. Data Input Module

- Supported Formats - Tabular Data: CSV, Excel (XLSX)

- Supported Formats - Context Documents: Free-form text input, PDF documents, DOCX files

- Processing Functions:

- read_csv_with_sniffer(): Auto-detects CSV delimiters

- import_metadata_from_file(): Reads existing metadata if provided

- read_context_file(): Extracts context from PDFs/DOCX for LLM-assisted annotation
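Delimiter auto-detection of the kind performed by read_csv_with_sniffer() can be sketched with the standard library's `csv.Sniffer`. The helper names, the candidate delimiter set and the comma fallback below are assumptions; the tool's own function may work differently.

```python
import csv
import io


def sniff_delimiter(sample, candidates=",;\t|"):
    """Guess the delimiter from a text sample, falling back to a comma."""
    try:
        return csv.Sniffer().sniff(sample, delimiters=candidates).delimiter
    except csv.Error:
        return ","  # assumed fallback when no consistent delimiter is found


def read_rows(text):
    """Parse delimited text into rows using the detected delimiter."""
    delimiter = sniff_delimiter(text[:4096])  # a prefix is enough for detection
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


rows = read_rows("depth;ph;clay\n10;6.5;22\n30;6.8;25\n")
```

Restricting the candidates keeps the sniffer from choosing characters such as the decimal point, which can occur with a regular frequency in numeric tables.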

2. Data Analysis & Type Detection

- Function: detect_column_type_from_series()

- Detects:

- String: Text data

- Numeric: Integers and floats

- Date: Temporal values

- Approach: Statistical sampling of column values (up to 200 non-null entries)
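The sampling approach can be sketched in plain Python as follows. The 200-value sample size comes from the description above; the 90% agreement threshold, the accepted date formats and the function names are assumptions, and the tool's detect_column_type_from_series() operates on pandas Series rather than lists.

```python
from datetime import datetime

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y-%m-%dT%H:%M:%S")  # assumed formats


def _is_number(value):
    try:
        float(value)
        return True
    except ValueError:
        return False


def _is_date(value):
    for fmt in DATE_FORMATS:
        try:
            datetime.strptime(value, fmt)
            return True
        except ValueError:
            continue
    return False


def detect_column_type(values, sample_size=200, threshold=0.9):
    """Classify a column as 'numeric', 'date' or 'string' from a sample of values."""
    cleaned = (str(v).strip() for v in values if v is not None)
    sample = [v for v in cleaned if v][:sample_size]  # up to 200 non-empty entries
    if not sample:
        return "string"
    if sum(_is_number(v) for v in sample) / len(sample) >= threshold:
        return "numeric"
    if sum(_is_date(v) for v in sample) / len(sample) >= threshold:
        return "date"
    return "string"


detected = {
    "ph": detect_column_type(["6.5", "7", None, "5.9"]),
    "sampled_on": detect_column_type(["2021-03-04", "2020-12-31"]),
    "texture": detect_column_type(["loam", "clay", "12"]),
}
```

A threshold below 100% tolerates a few stray values, such as a "n/a" marker in an otherwise numeric column.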

3. Metadata Framework

- Function: build_metadata_df_from_df()

- Template Fields:

- name: Column identifier

- datatype: Type classification (string/numeric/date)

- element: Semantic element definition

- unit: Measurement unit

- method: Collection/calculation method

- description: Human-readable description

- element_uri: Link to external vocabulary

4. LLM Integration Layer (Optional)

Purpose: Automate the extraction and structuring of descriptions from existing documentation when users have context materials.

When to Use:

- User has documentation (PDFs, Word docs, etc.) describing variables

- Manual annotation is time-consuming for large datasets

- Descriptions need to be extracted from unstructured text

Supported Providers:

OpenAI (Recommended)

- Uses GPT models for high-quality response generation

- get_response_OpenAI(): Direct API calls

- Best for complex, domain-specific text extraction

Apertus (Alternative)

- Self-hosted LLM option

- get_response_Apertus(): HTTP-based endpoint

- Swiss-based open-source model

Functionality:

- Function: generate_descriptions_with_LLM()

- Inputs:

- Variable names to describe

- Context information from documents or text input

- Output Format: Structured JSON with descriptions for each variable
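Since LLM replies often wrap the requested JSON in extra text, a tolerant parsing step is useful. The sketch below shows one possible approach; it is an assumption about how such a parser could work, not the tool's actual response parser, and the function name and reply format are hypothetical.

```python
import json


def extract_descriptions(reply, variables):
    """Pull a JSON object out of an LLM reply and keep only the requested variables."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        return {}
    try:
        parsed = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return {}  # malformed JSON: the caller can fall back to manual entry
    return {name: str(parsed[name]) for name in variables if name in parsed}


reply = (
    "Here are the descriptions:\n"
    '{"ph_h2o": "Soil pH measured in water", "extra": "not requested"}\n'
    "Let me know if you need more."
)
descriptions = extract_descriptions(reply, ["ph_h2o", "clay"])
```

Filtering against the requested variable names prevents the model from introducing columns that do not exist in the dataset.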

5. Semantic Embedding & Vocabulary Matching

- Model: Sentence Transformers (default: all-MiniLM-L6-v2)

- Functions:

- load_sentence_model(): Load embedding model

- load_vocab_indexes(): Load pre-computed FAISS indexes

- embed_vocab(): Generate embeddings with optional definition weighting

- Purpose: Match generated or manually-entered descriptions to controlled vocabularies

Pre-computed Vocabulary Sources

The FAISS vectorstore was pre-computed by embedding terms from four major public vocabularies:

| Vocabulary | Domain | Source |

|---|---|---|

| Agrovoc | Agricultural and food sciences | FAO - Food and Agriculture Organization |

| GEMET | Environmental terminology | European Environment Agency (EEA) |

| GLOSIS | Soil science and properties | FAO Global Soil Information System |

| ISO 11074:2005 | Soil quality terminology | International Organization for Standardization |

This multi-vocabulary approach enables annotation of diverse datasets including agricultural, environmental, and soil-related data.

FAISS Index Structure:

Index File: vocabCombined-{modelname}.index

Metadata File: vocabCombined-{modelname}-meta.npz

Metadata Dictionary Format:

{

index_id: {

"uri": "vocabulary_uri",

"label": "preferred_label",

"definition": "term_definition",

"QC_label": "prefLabel|altLabel"

},

...

}
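To show how a row position in the index relates to the metadata dictionary, the sketch below performs a brute-force cosine search over three toy vectors. It deliberately uses plain Python instead of FAISS and Sentence Transformers, so the vectors, URIs, labels and function names are all invented for illustration; only the metadata keys follow the format above.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_matches(query_vector, vectors, metadata, k=2):
    """Return the metadata entries of the k vectors most similar to the query."""
    ranked = sorted(range(len(vectors)),
                    key=lambda i: cosine(query_vector, vectors[i]),
                    reverse=True)
    return [metadata[i] for i in ranked[:k]]


# Toy 3-dimensional "embeddings"; real vectors would have 384 dimensions.
vectors = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.2, 0.9]]
metadata = {
    0: {"uri": "https://example.org/vocab/soil-ph", "label": "soil pH",
        "definition": "Acidity of the soil", "QC_label": "prefLabel"},
    1: {"uri": "https://example.org/vocab/clay", "label": "clay content",
        "definition": "Share of clay particles", "QC_label": "prefLabel"},
    2: {"uri": "https://example.org/vocab/erosion", "label": "erosion",
        "definition": "Loss of topsoil", "QC_label": "altLabel"},
}
matches = best_matches([0.8, 0.2, 0.1], vectors, metadata)
```

The integer key of the metadata dictionary plays the same role as the identifier returned by a vector index search: it maps a hit back to its URI, label and definition.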

6. Export & Download Module

- Function: download_bytes()

- Supported Formats:

- Excel (XLSX) - for human review

- JSON - for machine processing

- CSV - for spreadsheet tools

- Implementation: Streamlit session-based download management
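As an example of a machine-readable export, the metadata template fields could be serialised to a Table Schema descriptor as sketched below. The mapping is an assumption: the datatype translation, the use of the `rdfType` property for element_uri and the function name are illustrative choices, not the tool's documented export logic.

```python
import json

# Assumed mapping from the template's datatype values to Table Schema field types.
TYPE_MAP = {"string": "string", "numeric": "number", "date": "date"}


def to_table_schema(rows):
    """Build a Table Schema descriptor from annotated column metadata."""
    fields = []
    for row in rows:
        field = {"name": row["name"], "type": TYPE_MAP.get(row.get("datatype"), "any")}
        if row.get("description"):
            field["description"] = row["description"]
        if row.get("element_uri"):
            field["rdfType"] = row["element_uri"]  # link to the vocabulary concept
        fields.append(field)
    return {"fields": fields}


annotated = [
    {"name": "ph_h2o", "datatype": "numeric", "description": "Soil pH measured in water",
     "element_uri": "https://example.org/vocab/soil-ph"},
    {"name": "site", "datatype": "string"},
]
schema = to_table_schema(annotated)
schema_json = json.dumps(schema, indent=2)
```

Optional properties are only written when a value exists, so sparsely annotated columns still produce a valid descriptor.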

Main Sequence Diagram

graph TB

A["User Interface

Streamlit App"] -->|Upload Data| B["Data Input Handler

CSV/Excel/PDF"]

B -->|Parse Data| C["Data Analysis

Column Type Detection"]

C -->|Analyze Structure| D["Metadata Framework

Build Template"]

D --> E{"Description Source?"}

E -->|Manual Entry| F["User Provides

Descriptions"]

E -->|Optional: Auto-generate| I[/"LLM Provider

(Optional Tool)"\]

I -->|OpenAI API| J["OpenAI Client

GPT Models"]

I -->|Apertus API| K["Apertus Client

Local LLM"]

J -->|JSON Descriptions| L["Response Parser

JSON Extraction"]

K -->|JSON Descriptions| L

L --> M["LLM-generated

Descriptions"]

F --> N["Semantic Embedding

Sentence Transformers"]

M --> N

N -->|Vector Query| O["proposal for generalized ObservedProperty"]

V1["Agrovoc

Agricultural"] -.->|Pre-embedded| G["FAISS vector store"]

V2["GEMET

Environmental"] -.->|Pre-embedded| G

V3["GLOSIS

Soil Science"] -.->|Pre-embedded| G

V4["ISO 11074:2005

Soil Quality"] -.->|Pre-embedded| G

G --> O

O -->Q["Export Handler

flat csv/TableSchema/CSVW"]

Q -->|Downloaded metadata| A

Key Architectural Decisions

Optimization Strategies:

- Model Caching: Streamlit @st.cache_resource for persistent model loading

- API Caching: JSON-based result memoization to avoid redundant API calls

- FAISS Optimization: Pre-computed indexes for O(log n) vector search

- Batch Processing: Process multiple columns in single LLM call
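The API caching strategy can be illustrated with a small JSON-file memoizer. This is a sketch under assumptions: the cache layout, the hashing of the request into a key and the names `cached_call` and `fake_llm` are invented for the example and may not match the tool's cache.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def cached_call(cache_file, request, compute):
    """Return the stored result for request, calling compute only on a cache miss."""
    cache_path = Path(cache_file)
    cache = json.loads(cache_path.read_text(encoding="utf-8")) if cache_path.exists() else {}
    # Serialising with sorted keys makes the cache key independent of dict ordering.
    key = hashlib.sha256(json.dumps(request, sort_keys=True).encode("utf-8")).hexdigest()
    if key not in cache:
        cache[key] = compute(request)
        cache_path.write_text(json.dumps(cache), encoding="utf-8")
    return cache[key]


calls = []


def fake_llm(request):
    """Stand-in for an expensive API call; records how often it is invoked."""
    calls.append(request)
    return {"description": f"Description of {request['column']}"}


with tempfile.TemporaryDirectory() as tmp:
    cache_file = Path(tmp) / "api-cache.json"
    first = cached_call(cache_file, {"column": "ph_h2o"}, fake_llm)
    second = cached_call(cache_file, {"column": "ph_h2o"}, fake_llm)
```

The second call is served from the file, so repeated annotation runs over the same columns do not trigger new API requests.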

INSPIRE Geopackage Transformation

Info

Current version:

Technology:

Project:

Access Point:

Overview and Scope

Key Features

User Manual

Architecture

Technological Stack

Main Sequence Diagram

Database Design

Integrations & Interfaces

Key Architectural Decisions

Risks & Limitations

Other recommended tools acknowledged by SoilWise community

The following components are not products of the SoilWise project and are not an integral part of the SoilWise Catalogue, but they are recommended by the SoilWise community.

Hale Studio

A proven ETL tool optimised for working with complex structured data, such as XML, relational databases, or a wide range of tabular formats. It supports all required procedures for semantic and structural transformation, and it can also handle reprojection. While Hale Studio exists as a multi-platform interactive application, its capabilities can also be provided through a web service with an OpenAPI interface.

User Manual

A comprehensive tutorial video on soil data harmonisation with hale studio can be found here.

Setting up a transformation process in hale»connect

Complete the following steps to set up soil data transformation, validation and publication processes:

- Log into hale»connect.

- Create a new transformation project (or upload it).

- Specify source and target schemas.

- Create a theme (this is a process that describes what should happen with the data).

- Add a new transformation configuration. Note: Metadata generation can be configured in this step.

- A validation process can be set up to check against conformance classes.

Executing a transformation process

- Create a new dataset, select the theme of the current source data, and provide the source data file.

- Execute the transformation process. ETF validation processes are also performed. If successful, a target dataset and the validation reports will be created.

- View and download services will be created if required.

To create metadata (data set and service metadata), activate the corresponding button(s) when setting up the theme for the transformation process.

Metadata Validation

Introduction

Metadata should help users assess the usability of a data set for their own purposes and understand its quality. Assessing the quality of the metadata itself may guide stakeholders in the future governance of the system.

Overview and Scope

In terms of metadata, the SoilWise Repository aims to balance harvesting between quantity and quality; see the Harvester Component for more information. Catalogues which capture metadata authored by various data communities typically show a wide range of metadata completeness and accuracy. Therefore, the SoilWise Repository employs metadata validation mechanisms to provide additional information about metadata completeness, conformance and integrity. Information resulting from the validation process is stored together with each metadata record in a relational database and updated after a new metadata version is registered. Within the first iteration, it is not displayed in the SoilWise Catalogue.

The following components monitor aspects of metadata quality:

- Metadata completeness calculates a score based on selected populated metadata properties

- Metadata INSPIRE compliance

- Link Liveliness Assessment (part of the Metadata Augmentation components) validates the availability of resources described by the record

Intended Audience

The SoilWise Metadata Validation targets the following user groups:

- JRC data analysts monitoring the metadata quality of published soil-related datasets.

Metadata completeness

Info

Current version: 0.2.0

Technology: Python

Project: Metadata validator

Access point: Postgres database

The software calculates the level of completeness of a record, expressed as a percentage score over the endorsed properties, taking into account that some properties are conditional on the values selected in other properties.

Completeness is evaluated against a set of metadata elements for each harmonised metadata record in the SWR platform. Records for which harmonisation fails are neither evaluated nor imported.

| Label | Description | Score |
|---|---|---|
| Identification | Unique identification of the dataset (A UUID, URN, or URI, such as DOI) | 10 |
| Title | Short meaningful title | 20 |
| Abstract | Short description or abstract (1/2 page), can include (multiple) scientific/technical references | 20 |
| Author/Organisation | An entity responsible for the resource (e.g. person or organisation) | 20 |
| Date | Last update date | 10 |
| Type | The nature of the content of the resource | 10 |
| Rights | Information about rights and licences | 10 |
| Extent (geographic) | Geographical coverage (e.g. EU, EU & Balkan, France, Wallonia, Berlin) | 5 |
| Extent (temporal) | Temporal coverage | 5 |
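
The scoring can be illustrated with a short sketch. It makes several assumptions that are not confirmed by the component itself: the dictionary keys are hypothetical, conditional properties are not modelled, and because the weights listed in the table add up to 110, the sketch normalises by the total weight to obtain a percentage.

```python
# Weights copied from the table above; the key names are hypothetical.
WEIGHTS = {
    "identification": 10,
    "title": 20,
    "abstract": 20,
    "author_organisation": 20,
    "date": 10,
    "type": 10,
    "rights": 10,
    "extent_geographic": 5,
    "extent_temporal": 5,
}


def completeness(record):
    """Percentage of the total weight earned by the populated properties."""
    earned = sum(weight for key, weight in WEIGHTS.items() if record.get(key))
    return round(100 * earned / sum(WEIGHTS.values()), 1)


# A record with only an identifier, a title and an abstract populated.
partial = {"identification": "doi:10.1234/example", "title": "Soil map", "abstract": "Topsoil pH."}
score = completeness(partial)
```

Empty strings and missing keys both count as unpopulated in this sketch, so a property only earns its weight when it carries a value.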

Architecture

Technological Stack

The ATS/ETS methodology has been suggested for developing validation tests.

Abstract Test Suites (ATS) define a set of abstract test cases or scenarios that describe the expected behaviour of metadata without specifying the implementation details. These test suites focus on the logical aspects of metadata validation and provide a high-level view of metadata validation requirements, enabling stakeholders to understand validation objectives and constraints without getting bogged down in technical details. They serve as a valuable communication and documentation tool, facilitating collaboration between metadata producers, consumers, and validators. ATS are often documented using natural language descriptions, diagrams, or formal specifications. They outline the expected inputs, outputs, and behaviours of the metadata under various conditions.

The SWR ATS is under development at https://github.com/soilwise-he/metadata-validator/blob/main/docs/abstract_test_suite.md

Executable Test Suites (ETS) are sets of tests designed according to ATS to perform the metadata validation. These tests are typically automated and can be run repeatedly to ensure consistent validation results. Executable test suites consist of scripts, programs, or software tools that perform various validation checks on metadata. These checks can include:

- Data Integrity: Checking for inconsistencies or errors within the metadata. This includes identifying missing values, conflicting information, or data that does not align with predefined constraints.

- Standard Compliance: Assessing whether the metadata complies with relevant industry standards, such as Dublin Core, MARC, or specific domain standards like those for scientific data or library cataloguing.

- Interoperability: Evaluating the metadata's ability to interoperate with other systems or datasets. This involves ensuring that metadata elements are mapped correctly to facilitate data exchange and integration across different platforms.

- Versioning and Evolution: Considering the evolution of metadata over time and ensuring that the validation process accommodates versioning requirements. This may involve tracking changes, backward compatibility, and migration strategies.

- Quality Assurance: Assessing the overall quality of the metadata, including its accuracy, consistency, completeness, and relevance to the underlying data or information resources.

- Documentation: Documenting the validation process itself, including any errors encountered, corrective actions taken, and recommendations for improving metadata quality in the future.
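
The relationship between an abstract test case and its executable counterpart can be sketched as follows. The two checks and all names (check_title_present, check_date_is_iso, run_suite) are hypothetical examples for illustration; they are not taken from the SWR ATS.

```python
import datetime


def check_title_present(record):
    """Abstract test case: 'a record has a non-empty title'."""
    return bool(str(record.get("title") or "").strip())


def check_date_is_iso(record):
    """Abstract test case: 'the date is a valid ISO 8601 calendar date'."""
    try:
        datetime.date.fromisoformat(str(record.get("date") or ""))
        return True
    except ValueError:
        return False


# The executable test suite: a named collection of automated checks.
TESTS = {
    "title_present": check_title_present,
    "date_is_iso": check_date_is_iso,
}


def run_suite(record):
    """Run every check and report pass/fail per test, so runs are repeatable."""
    return {name: check(record) for name, check in TESTS.items()}


report = run_suite({"title": "Soil map", "date": "2025-01-20"})
```

Keeping each check as a small named function makes it straightforward to trace a failed result back to the abstract test case it implements.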

The ETS is currently implemented through a hale»connect instance and a locally running prototype GeoNetwork instance.

Main Sequence Diagram

Metadata INSPIRE compliance

Info

Current version: 0.2.0

Technology: Esdin Test Framework (ETF), Python

Project: Metadata validator

Access point: Postgres database

Compliance with a given standard is an indicator of (meta)data quality. This indicator is measured on datasets claiming to conform to the INSPIRE regulation. The validation is performed on non-augmented, non-harmonised metadata records. The observed indicator is stored in the augmentation table. The Esdin Test Framework is used in combination with metadata validation rules from the INSPIRE community.

All metadata records whose source property value equals INSPIRE are validated against the INSPIRE rules. In total, 506 metadata records were harvested from the INSPIRE Geoportal.

For this case, the INSPIRE Reference Validator was used. The validator is based on the INSPIRE ATS and is available as a validation service. For the initial validation, INSPIRE metadata were harvested into a local instance of GeoNetwork, which allows on-the-fly validation of metadata using external validation services (including the INSPIRE Reference Validator). Metadata were downloaded from the PostgreSQL database and uploaded to the local instance of GeoNetwork, where the XML validation and INSPIRE validation were executed. Two validation runs were executed: the first checked the consistency of metadata using XSD and Schematron (using templates for the ISO 19115 standard for spatial data sets and the ISO 19119 standard for services), and the second validated the metadata records using the INSPIRE ETS.

Architecture

Technological Stack

| Technology | Description |
|---|---|
| Python | Used for the linkchecker integration, API development, and database interactions. |
| PostgreSQL | Primary database for storing and managing link information. |
| CI/CD | Automated pipeline for continuous integration and deployment, with scheduled weekly runs for link liveliness assessment. |
| Esdin Test Framework | Open-source validation framework, commonly used in INSPIRE. |

Main Sequence Diagram

Database Design

Results of metadata validation are stored in a PostgreSQL database, in a table called validation within the schema validation.

| identifier | Score | Date |
|---|---|---|
| abc-123-cba | 60 | 2025-01-20T12:20:10Z |

Validation runs every week as a CI/CD pipeline on records which have not been validated for 2 weeks. This builds up a history that helps to understand validation results over time (note that changes in the records as well as in the ETS itself may cause differences in score).
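
The selection of records for a weekly run can be sketched as follows. This is an illustration under stated assumptions: the function due_for_validation is hypothetical, the history rows mirror the (identifier, score, date) layout of the table above, and an in-memory list stands in for the PostgreSQL table.

```python
from datetime import datetime, timedelta, timezone


def due_for_validation(history, record_ids, now, max_age=timedelta(weeks=2)):
    """Return ids that were never validated or last validated more than max_age ago."""
    latest = {}
    for identifier, _score, stamp in history:
        # fromisoformat() on older Python versions does not accept a trailing "Z".
        when = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
        if identifier not in latest or when > latest[identifier]:
            latest[identifier] = when
    return [rid for rid in record_ids if rid not in latest or now - latest[rid] > max_age]


# Validation history: one row per run, so scores can be compared over time.
history = [("abc-123-cba", 60, "2025-01-20T12:20:10Z")]
records = ["abc-123-cba", "new-456-wen"]

one_week_later = datetime(2025, 1, 27, 12, 0, tzinfo=timezone.utc)
three_weeks_later = datetime(2025, 2, 10, 12, 0, tzinfo=timezone.utc)

due_soon = due_for_validation(history, records, one_week_later)
due_later = due_for_validation(history, records, three_weeks_later)
```

Appending a new row per run, rather than overwriting the previous score, is what allows the history described above to accumulate.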

Integrations & Interfaces

Metadata Augmentation

Introduction

Overview and Scope

This set of components augments metadata statements using various techniques. Augmentations are stored in a dedicated augmentation table, together with an indication of the process that produced them. The statements are combined with the ingested content to offer users an optimal catalogue experience.

At the moment, Metadata Augmentation functionality is covered by the following components:

- Keyword Matcher

- Element Matcher

- Translation Module

- Link Liveliness Assessment

- Spatial Metadata Extractor

- Spatial Locator

- Metadata Interlinker

Upcoming components

Intended Audience

Metadata Augmentation is a backend component whose outputs are displayed to users in the Metadata Catalogue. The intended audience therefore corresponds to that of the Metadata Catalogue. Additionally, we expect a maintenance role:

- SWC Administrator monitoring the augmentation processes, with access to history, logs and statistics. Administrators can manually start a specific augmentation process.

Keyword matcher

Info

Current version: 0.2.0

Technology: Python

Release: https://doi.org/10.5281/zenodo.14924181

Projects: Keyword matcher

Overview and Scope

Keywords are an important mechanism for filtering and clustering records. To be matched, similar keywords first need to be grouped. This module evaluates the keywords of existing records and makes them identical when they are highly similar.

The module analyses the existing keywords on a metadata record. Two cases can be identified: